Balancing AI Innovation with Rising Cyber Threats in Healthcare

The following is a guest article by Shane Cox, Director, Cyber Fusion Center at MorganFranklin Cyber

Artificial intelligence is reshaping healthcare at an astonishing pace. From predictive diagnostics to robotic-assisted surgeries, AI’s potential to improve patient outcomes and streamline operations is undeniable. Yet, as hospitals, insurers, and providers embrace AI-driven automation, they also open the door to an entirely new wave of cyber risks. AI is no longer just an asset; it is also a liability if not properly secured.

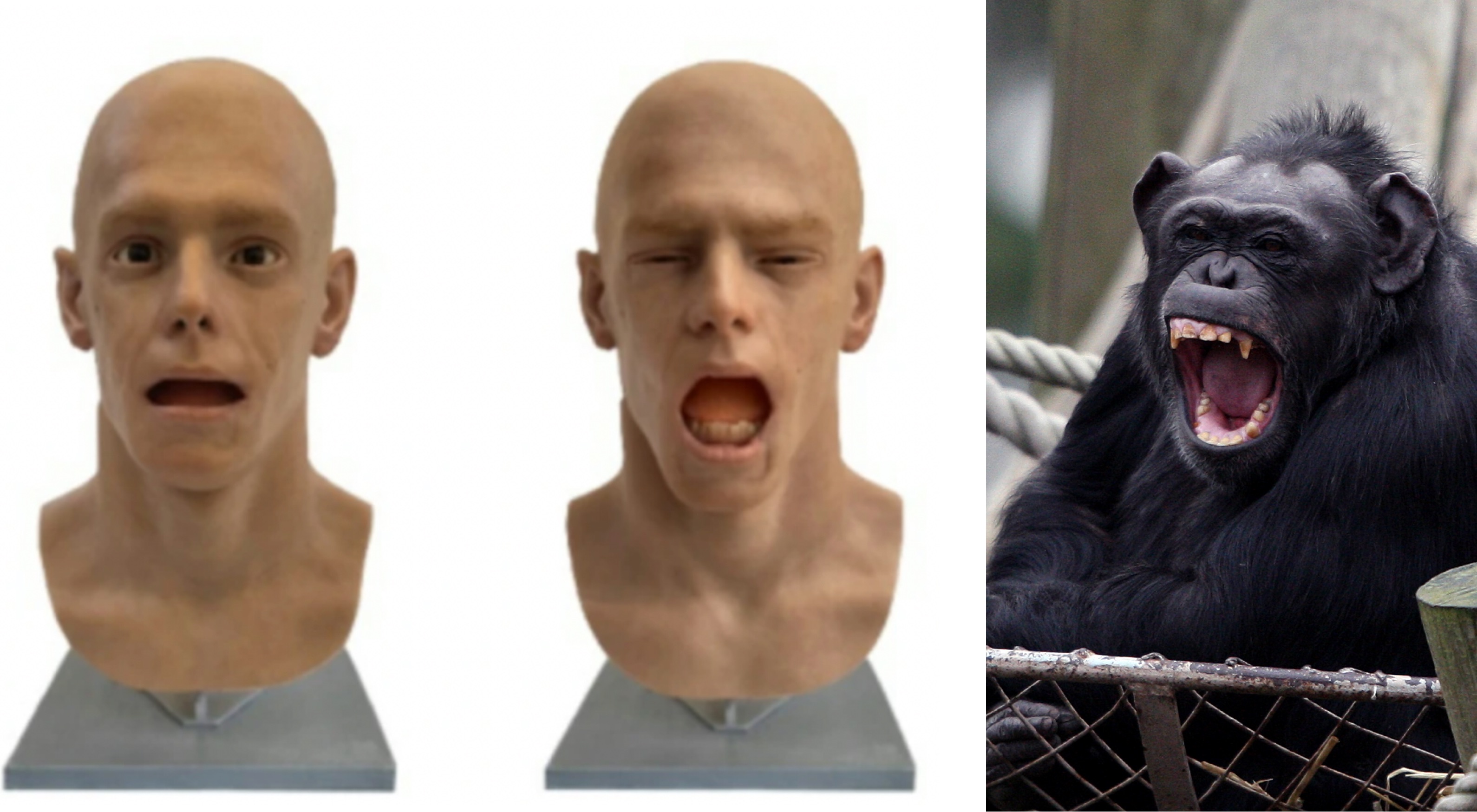

The same advancements that allow AI to analyze vast amounts of medical data and detect diseases faster than any human doctor can also be manipulated to launch sophisticated cyberattacks. Threat actors are already using AI to craft highly convincing phishing campaigns, generate deepfake voices to trick hospital administrators into fraudulent payments, and automate distributed denial-of-service (DDoS) attacks that can cripple critical healthcare systems. In an industry where downtime can be the difference between life and death, the stakes have never been higher.

AI-Powered Threats Are Reshaping the Cybersecurity Landscape

The shift toward AI-driven cyberattacks is forcing healthcare security teams to play a game of cat and mouse at unprecedented speed. Traditional phishing scams were once easy to spot, riddled with typos and awkward phrasing. Now, AI can generate flawless, hyper-personalized phishing emails, mimicking the language, tone, and urgency of legitimate communications. Even security-aware employees struggle to tell the difference.

Deepfake technology has added another layer of deception. Imagine a hospital finance director receiving a call that sounds exactly like the CFO, authorizing an urgent wire transfer for a medical supply purchase. The voice is eerily familiar, the details check out, and the pressure to act quickly is high. But it’s not the CFO. It’s an AI-generated deepfake, convincing enough to bypass even the most skeptical professionals. These scenarios are no longer hypothetical. They are happening now.

At the same time, attackers are deploying AI-driven botnets to launch highly adaptive DDoS attacks. These campaigns flood hospital servers with traffic from distributed sources, overwhelming systems and rendering them unusable. Modern botnets can learn and shift tactics in real time, evading traditional defenses. Hospitals running outdated security systems are especially vulnerable to these disruptions, which can delay life-saving procedures and compromise patient care.

The Inherent Risks in AI Itself

AI risks aren’t just limited to external attackers. When deployed hastily or without strong oversight, AI systems themselves can introduce new and overlooked vulnerabilities. Many healthcare organizations are embracing AI to enhance diagnostics, streamline workflows, and personalize treatment plans. But these models are only as good as the data they’re trained on. If a malicious actor gains access to the training dataset, they can manipulate outcomes through data poisoning, injecting corrupted or biased data that skews predictions and decision-making.

The consequences of such an attack can be severe. A tampered AI model might misdiagnose a patient, alter dosage recommendations, or prioritize treatments based on false variables. The potential for silent, systemic errors is a growing concern as AI becomes more deeply embedded in clinical decision-making.
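One basic safeguard against tampering with a stored training dataset is to record file digests at a trusted point in time and compare against them before each training run. The sketch below is a minimal illustration under assumed conditions: the dataset is a directory of files, and the function names (`build_manifest`, `changed_files`) are hypothetical, not part of any particular product. It detects changes made after the manifest was recorded; it cannot detect data that was already poisoned at the source.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Record a digest for every file under data_dir (assumed dataset layout)."""
    return {
        str(p.relative_to(data_dir)): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def changed_files(data_dir: Path, trusted: dict[str, str]) -> list[str]:
    """List files added, removed, or modified since the trusted manifest."""
    current = build_manifest(data_dir)
    names = set(current) | set(trusted)
    return sorted(n for n in names if current.get(n) != trusted.get(n))
```

In practice the trusted manifest would need to be stored somewhere the dataset's writers cannot modify; otherwise an attacker who can alter the data can alter the manifest too.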

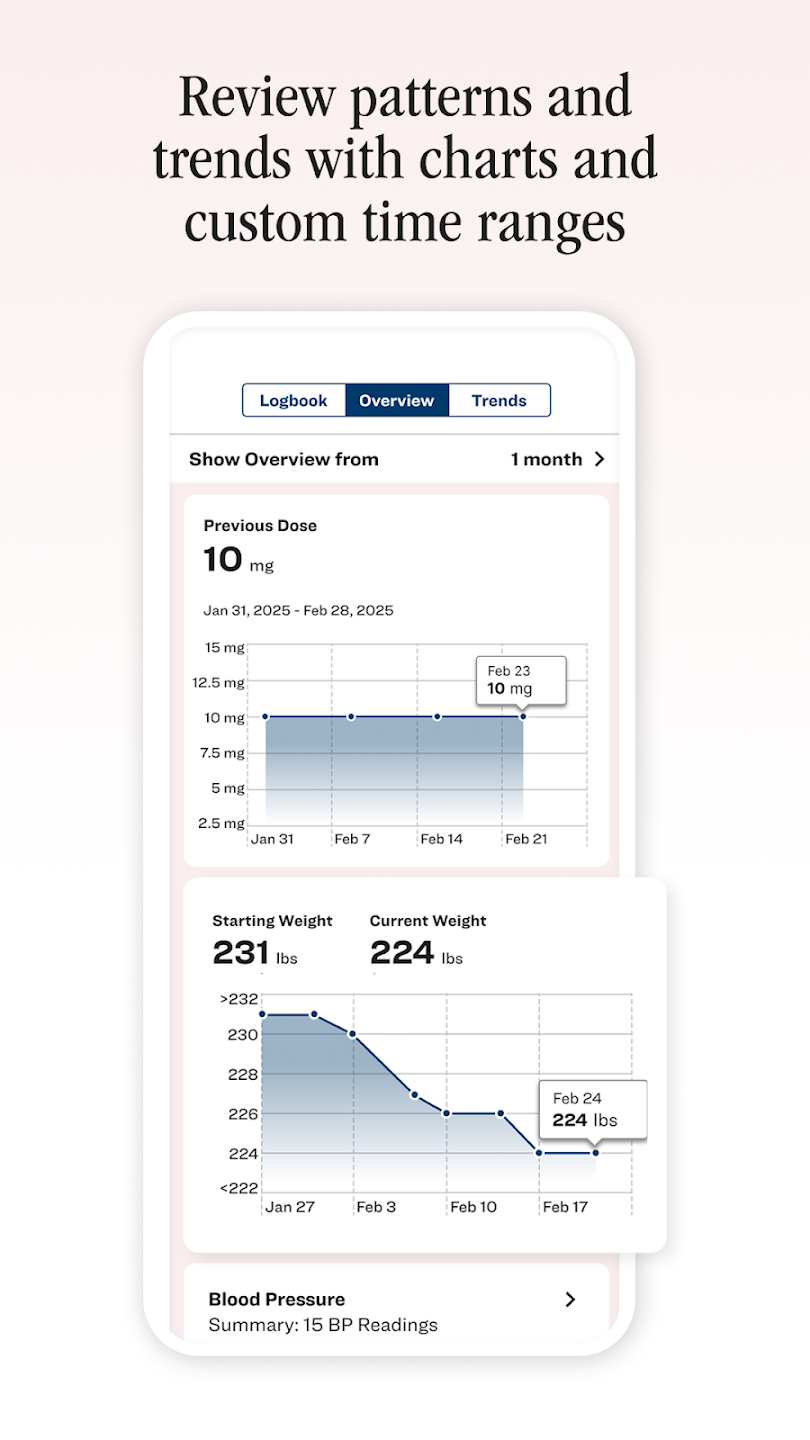

AI-powered chatbots and virtual assistants have also become commonplace for patient engagement, from scheduling appointments to offering symptom triage. These systems depend on APIs to communicate across platforms, and any misconfiguration can expose sensitive data. A single unsecured API can be an open door to attackers, compromising patient records, insurance information, and even clinical trial data.

The problem is compounded by the fact that many healthcare organizations lack visibility into the security posture of their AI vendors, and many providers integrate external AI tools without fully vetting their security protocols. This creates a fragmented and largely invisible ecosystem where AI applications operate beyond the reach of the hospital’s internal security team. When vendors lack strong security practices or when oversight is insufficient, the risk compounds quickly.

A New Approach to AI Governance and Defense

To mitigate these risks, healthcare security leaders must implement stricter AI governance policies and enforce rigorous third-party risk assessments before integrating AI-powered tools into their environments. AI solutions should undergo regular security audits, penetration testing, and API monitoring to ensure vulnerabilities are identified and patched before they can be exploited. Additionally, adopting zero-trust architectures can help ensure that third-party tools only access what they absolutely need, minimizing potential exposure.
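The least-privilege idea behind zero trust can be illustrated with a deny-by-default access check. This is a simplified sketch, not a full zero-trust implementation: the tool name `scheduling-bot` and the scope strings are invented for illustration, and a real deployment would also verify identity, device, and context on every request.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ToolGrant:
    """Scopes explicitly granted to one third-party tool (hypothetical names)."""
    tool: str
    scopes: frozenset[str] = field(default_factory=frozenset)


def is_allowed(grants: dict[str, ToolGrant], tool: str, scope: str) -> bool:
    """Deny by default: allow only a scope explicitly granted to a known tool."""
    grant = grants.get(tool)
    return grant is not None and scope in grant.scopes


# Illustrative grant table: a scheduling assistant may touch appointments only.
GRANTS = {
    "scheduling-bot": ToolGrant(
        "scheduling-bot",
        frozenset({"appointments:read", "appointments:write"}),
    ),
}
```

With this table, a request from `scheduling-bot` for `appointments:read` passes, while a request for patient records, or any request from an unregistered tool, is refused.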

For healthcare organizations, conventional security strategies are no longer enough. Defending against AI-powered attacks requires more than just upgrading traditional security tools. The entire security strategy must evolve. Managed Detection and Response (MDR) systems need to incorporate AI-driven anomaly detection that can distinguish between normal hospital automation and malicious AI behavior. Security platforms should become predictive, not just reactive, analyzing patterns and dynamically adjusting defenses in real time.
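To make the idea of anomaly detection concrete, the sketch below flags activity that deviates sharply from a known-normal baseline. It is a plain statistical check (a z-score against the baseline mean), far simpler than the learned models an MDR platform would use, and the metric (requests per minute from an automated system) and the threshold of 3.0 are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(
    baseline: list[float], observed: list[float], threshold: float = 3.0
) -> list[int]:
    """Return indexes in observed whose z-score against the baseline exceeds threshold."""
    if len(baseline) < 2:
        raise ValueError("baseline needs at least two samples")
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value is a deviation.
        return [i for i, v in enumerate(observed) if v != mu]
    return [i for i, v in enumerate(observed) if abs(v - mu) / sigma > threshold]


# Hypothetical requests-per-minute readings from a hospital automation job.
normal_rates = [100, 102, 98, 101, 99]
new_rates = [101, 250, 97]
suspicious = flag_anomalies(normal_rates, new_rates)
```

A fixed baseline like this cannot adapt as legitimate workloads change, which is one reason the approach described above calls for defenses that adjust dynamically.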

At the same time, Digital Forensics and Incident Response (DFIR) teams must rethink their investigative methods. The traditional approach of tracking digital footprints and identifying malware signatures is ineffective against self-learning, AI-generated threats. Security teams must develop advanced forensic capabilities to deconstruct AI-generated malware, trace deepfake fraud attempts, and counter AI-powered attack chains.

Putting Security in Lockstep with Innovation

AI security can no longer be an afterthought. It must be embedded into every phase of development and deployment, from initial design to daily operations. Security leaders and AI developers need to collaborate from the start, building systems with robust controls that prioritize patient safety and data integrity.

The future of healthcare depends on AI’s success, but its success depends on security. The industry must act now to build AI-powered defenses strong enough to withstand AI-powered attacks.

About Shane Cox

Shane Cox is the Director of Cyber Fusion Center and Incident Response at MorganFranklin Cyber. With over 20 years of experience in cybersecurity operations, Shane specializes in building and optimizing security teams and programs for detection and response, security platform management, EDR/MDR, threat intelligence, automation, incident response, and vulnerability management. At MorganFranklin, he is responsible for the strategic leadership, growth, optimization, and client satisfaction of Cyber Fusion Center services, and collaborates closely with cybersecurity leaders across various industry verticals.