What does ‘remastering’ an album actually mean?

Everything you wanted to know about (re-)mastering audio, but were afraid to ask. The post What does ‘remastering’ an album actually mean? appeared first on Popular Science.

“Digitally remastered!” Whether it’s another 10-disc Beatles box set just in time for the holiday season or a compilation of hip-hop classics on Spotify, relatively old songs will almost certainly come with the proclamation that the music has been remastered—and that as a result, they sound better than ever.

But what does “remastered” actually mean? And what does remastering a song involve? Popular Science investigates.

What is mastering?

To understand remastering, we first need to understand mastering. At its most basic level, mastering a record means quite literally creating a master copy of that record. This master is the source from which duplicates will be made. For a vinyl record, it’s the metal plate that’s used to stamp vinyl blanks with the grooves containing recorded information; for a cassette, it’s most likely a magnetic tape recording; and for digital releases, it’s a high-resolution audio file from which various other copies will be made.

Collin Jordan, a mastering engineer who runs his own mastering studio in Chicago, says that in the early days of recorded music, creating the master was a menial endeavor: “The job was usually given to the second-newest person on staff,” he laughs. “The newest person would sweep up and make coffee, and if you stuck around long enough, you’d get kicked up to mastering.”

So how did mastering go from being unskilled make-work for the intern to being a highly skilled career? Jordan says the advent of what we call “creative mastering” today came in the 1970s, when “a couple of different [engineers] started to say, ‘Hey, maybe if I add a little low end here or a little top end there, the result will just sound a little bit better to the end listener.’”

The results caught the ears of other bands, and suddenly people were queuing up to get the same treatment for their records. “Musicians [are] incredibly competitive,” Jordan says. “Y’know, ‘Hey, what did you do to that guy’s record? Can you do it to mine?’”

Naively, one might think that to make a record louder and heavier, you just turn everything up to 11. Doing so, however, would make an album sound awful. Sounds with too much amplitude will distort and degrade, and the careful balance between frequencies created by the mixing engineer will be lost. Even worse, adding too much volume to a vinyl record—especially at low bass frequencies—risks actually kicking the needle out of the record’s groove.

NYC-based audio engineer Joseph Colmenero explains that a record’s groove is a physical analogue of the recording’s waveform, and there’s only so much physical space for that waveform to take up. “If you put too much energy—too much volume or too much bass, like a kick drum or transients—into that groove,” he explains, “the needle can cross over into the groove next to it.”
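The geometry behind Colmenero’s warning can be sketched with a little arithmetic. Assuming the groove wiggles as a simple sine tone, its sideways excursion for a given stylus velocity shrinks as frequency rises (X = v / 2πf). The same energy at a low bass frequency therefore swings the groove much farther toward its neighbor. The 5 cm/s velocity below is an arbitrary illustrative value. The sketch also ignores the RIAA equalization curve that real cutting applies, which turns bass down during cutting partly for this very reason.

```python
import math

def groove_excursion_um(peak_velocity_cm_s: float, freq_hz: float) -> float:
    """Peak sideways groove displacement, in micrometers, for a pure sine tone.

    For a displacement x(t) = X * sin(2*pi*f*t), the peak velocity is 2*pi*f*X,
    so X = v / (2*pi*f): at equal velocity, lower notes swing the groove farther.
    """
    displacement_cm = peak_velocity_cm_s / (2 * math.pi * freq_hz)
    return displacement_cm * 10_000  # centimeters -> micrometers

VELOCITY_CM_S = 5.0  # hypothetical cutting velocity, for illustration only

for freq in (50, 100, 1_000, 10_000):
    print(f"{freq:>6} Hz: {groove_excursion_um(VELOCITY_CM_S, freq):8.2f} micrometers")
```

At equal velocity, the 50 Hz tone moves the groove 20 times farther than the 1 kHz tone.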

Jordan concurs: “Sure, you can put a lot of bass on an [LP],” he says, “and high-end turntables will play it great, but the cheap ones will skip, yeah.” Having a brand new record start skipping was unlikely to go down well with the record-buying public, and Jordan says record companies generally opted to “master for the lowest common denominator. They weren’t cutting [records] for audiophiles; they were cutting it for the general public. If you go back and listen to, say, a [Led] Zeppelin recording or Beatles recording, there’s actually very little low end on them.”

Coaxing every last little bit of extra warmth and volume out of a record thus required being familiar with the limitations of both a given format and of the equipment on which the recording would be played—and also the knowledge of how to work within (and sometimes around) those limitations. Unsurprisingly, engineers who were able to do so became highly sought after.

From mastering to remastering

If mastering involves optimizing a recording’s sound for a given format, remastering involves taking an existing master and reapplying the mastering process to optimize that record’s sound for a new format.

The first slew of remasters hit the market during the 1980s and early 1990s, and their arrival was catalyzed by the rise of the compact disc. The popularity of the new format presented record companies with the question of what to do with their back catalogues—albums originally released on vinyl that customers were now looking to repurchase on CD.

Technically, releasing analogue music on CD necessarily involves remastering, insofar as it requires making a digital version of the original analogue master. However, the earliest CD releases of pre-digital records were what Jordan calls “flat transfers”—they came straight from the existing master, with no alterations or tweaks to accommodate the new format.

Compared to records mastered for release on CD, however, these older albums could sound relatively quiet and listless. As with anything related to music, of course, this is subjective, and some people prefer the sound of these original CD transfers. However, the contrast they formed with the bright, bold sound of digital audio led record companies to start asking audio engineers to adjust the digital masters to utilize the expanded possibilities of the new format.

Of course, one man’s optimization is another man’s butchery, and the decisions made during remastering can cause controversy among fans used to the original sound of their favorite record. Jordan points to the notorious 1997 remix/remaster of The Stooges’ Raw Power as an example: The original version of the record was largely seen as muddy and badly mixed, so fans were excited by the prospect of a remastered version that would iron out the kinks of the original and let them hear the album as it was meant to be.

“How it was meant to be” is also subjective, and the results of the Raw Power remaster were polarizing and remain so today. Jordan says it’s a demonstration of how you can’t please everyone; pretty much any remastering project will inevitably generate impassioned discussion and detailed comparisons to the original. “It’s tricky,” he says, “because you’re going to get criticism on either side. Some people are going to say, ‘You did too much…you ruined my favorite record by making it sound too modern.’ Other people are going to say, ‘You didn’t do enough…this is no improvement [on] the original version.’ I’ve heard both [things] on the same album.” He laughs. “What are you supposed to do with that?”

Mastering in the digital age

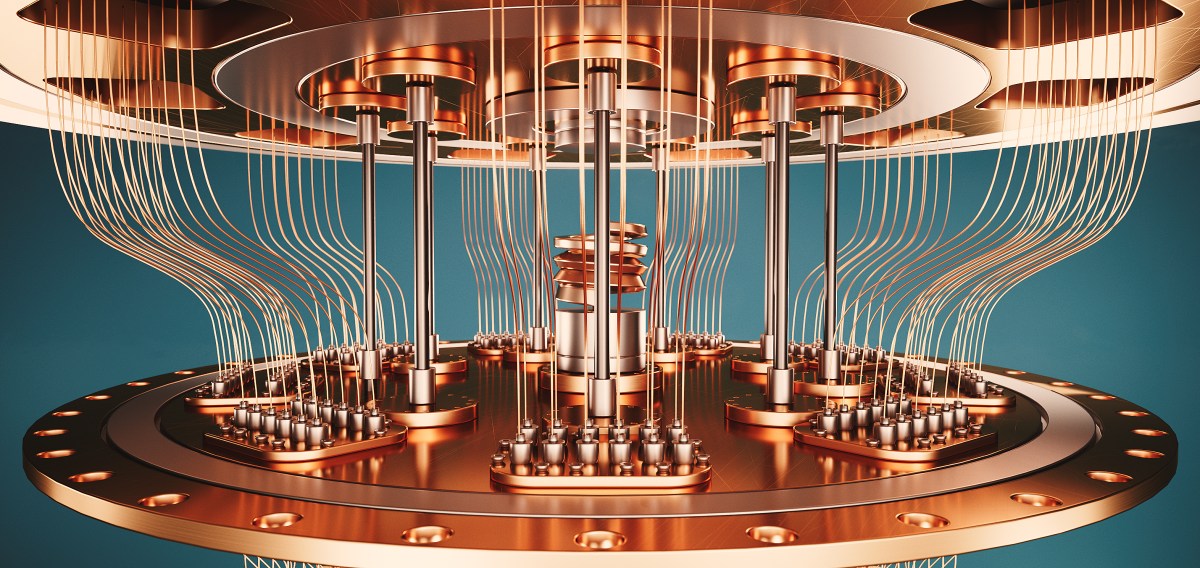

In the digital age, masters are no longer physical objects; instead, they’re digital files from which distribution copies are made. The songs you buy on CD, download from Bandcamp, or stream via your service of choice are all copied from these files.

Digital formats place far fewer constraints on a mastering engineer than their analogue predecessors. On the whole, Colmenero says, in comparison to analogue formats, “Digital is very forgiving.” Even so, these formats have their limitations, and the fundamental challenge of mastering remains the same: “You master to the restrictions of the format,” says Colmenero.

A CD, for instance, can only contain so much data. This means that early on, Sony and Philips, which developed the format jointly, had to decide on several parameters for CD audio. These were how frequently the analogue signal would be sampled to create a digital version of the audio waveform (the sample rate), how much data each sample would hold (the sample’s bit depth), and how much data the CD player would read every second (the bit rate). For CD audio and most other digital audio, the sample rate is 44.1 kHz, meaning the analogue sound is sampled 44,100 times per second. Each CD sample is 16 bits, and stereo audio has two channels, so a CD’s bit rate works out to 44,100 × 16 × 2 = 1,411,200 bits, or about 1,411 kilobits, per second. Formats like MP3 use compression algorithms to squeeze audio into much lower bit rates and save space. As a result, early MP3s, with bit rates as low as 64 kbps, were often sonically pale shadows of the CDs from which they were ripped.
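The CD arithmetic above can be checked in a few lines. This sketch computes the uncompressed (PCM) bit rate from sample rate, bit depth, and channel count. It also shows what sampling and 16-bit quantization do to a test tone. The 1 kHz sine and the helper names are illustrative choices, not part of any CD specification.

```python
import math

def pcm_bit_rate_bps(sample_rate_hz: int, bit_depth: int, channels: int) -> int:
    """Bits per second of uncompressed PCM audio."""
    return sample_rate_hz * bit_depth * channels

def sample_and_quantize(freq_hz: float, sample_rate_hz: int, bit_depth: int, n: int) -> list[int]:
    """Take n samples of a full-scale sine wave and round each one to a signed integer.

    A 16-bit sample can hold 65,536 whole-number steps (-32768..32767);
    this sketch uses the symmetric range -32767..32767.
    """
    max_code = 2 ** (bit_depth - 1) - 1
    return [
        round(max_code * math.sin(2 * math.pi * freq_hz * i / sample_rate_hz))
        for i in range(n)
    ]

cd_bps = pcm_bit_rate_bps(44_100, 16, 2)
print(f"CD audio: {cd_bps:,} bits/s (about {cd_bps / 1000:.0f} kbps)")
print(f"One minute of CD audio: {cd_bps * 60 // 8:,} bytes")
for compressed_kbps in (64, 128):
    print(f"{compressed_kbps} kbps is about 1/{cd_bps / (compressed_kbps * 1000):.0f} of that")

print(sample_and_quantize(1_000, 44_100, 16, 8))
```

The 64 kbps files described above carry roughly 1/22 of the data rate of the CD they came from.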

Today, the music you buy digitally comes in much higher quality, but data and speed constraints mean that streaming services still provide music with relatively low bit rates, albeit in more sophisticated and efficient formats than the MP3s you may or may not have found on LimeWire in 2001. Spotify’s free tier provides music as 128 kbps AAC, while Apple Music uses a proprietary format based on the AAC specification.

These constraints mean that mastering engineers still have their work cut out for them, especially given that, as Colmenero says, streaming services also like everything in their catalogues to play nicely together, with no sudden volume jumps or radically different transitions in sound between tracks. That’s no small feat when a playlist algorithm might pair an ultra-loud modern track with a song that was recorded and mastered decades earlier. To mitigate such issues, the biggest players in the streaming world maintain their own mastering standards—Apple, for instance, offers a certification called “Apple Digital Masters” (formerly “Mastered for iTunes”), aimed at ensuring music meets the optimum standards for distribution in its streaming format.
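To illustrate how tracks can be made to play “nicely together,” here is a simplified sketch of loudness normalization. It measures each track’s level and computes the gain that brings it to a common target. Production loudness normalization typically measures loudness in LUFS (per ITU-R BS.1770, with frequency weighting and gating). This sketch substitutes plain RMS level, and the -14 target is an assumed value chosen only for illustration.

```python
import math

def rms_dbfs(samples: list[float]) -> float:
    """RMS level in dB relative to digital full scale (with this definition,
    a full-scale sine reads about -3 dB)."""
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10 * math.log10(mean_square)

def normalization_gain_db(samples: list[float], target_dbfs: float) -> float:
    """Gain, in dB, that would move this track's RMS level onto the target."""
    return target_dbfs - rms_dbfs(samples)

def apply_gain(samples: list[float], gain_db: float) -> list[float]:
    factor = 10 ** (gain_db / 20)
    return [s * factor for s in samples]

TARGET_DBFS = -14.0  # hypothetical playback target, for illustration only

def sine(amplitude: float, n: int = 4410, freq_hz: float = 440.0, rate_hz: int = 44_100) -> list[float]:
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / rate_hz) for i in range(n)]

loud_modern_track = sine(0.9)   # stand-in for a heavily mastered recent song
quiet_older_track = sine(0.2)   # stand-in for a decades-old master

for name, track in [("modern", loud_modern_track), ("older", quiet_older_track)]:
    gain = normalization_gain_db(track, TARGET_DBFS)
    print(f"{name}: measured {rms_dbfs(track):.1f} dBFS, applying {gain:+.1f} dB")
```

Turning the older track up can push its peaks past full scale. A real system would need a limiter or would cap the gain, which this sketch does not do.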

As well as the challenges of mastering for streaming, there’s also an ever-increasing demand for immersive audio, driven (to some extent, at least) by the popularity of home theaters. Conventional stereo sound makes use of the stereo field, a virtual two-dimensional space that traces a 180° arc from the listener’s left ear around their face to their right ear. This is created by the use of two separate channels—left and right—when recording. The amount of a given sound that comes through each channel, along with the temporal separation between these sounds, creates the illusion that sounds are coming from various places within this field.

The effect can be used to emulate the experience of, say, seeing a band live: The vocals and drums come from the center, with the bass close by, while one guitar comes from the left and the other from the right. It can also be used to create sounds that seem to move from left to right or vice versa, like the iconic chopper at the beginning of Metallica’s “One.”
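The level side of that illusion, meaning how much of a sound goes to each channel, can be sketched with a pan law. This uses one common choice, the sine/cosine “constant-power” law, which keeps a sound’s total power steady as it moves. It ignores the timing differences mentioned above. `constant_power_pan` and `pan_sweep` are illustrative names, not functions from any particular audio library. A helicopter-style fly-by is just the pan position changing over time.

```python
import math

def constant_power_pan(pan: float) -> tuple[float, float]:
    """Left/right gains for a pan position from -1.0 (hard left) to +1.0 (hard right).

    Sine/cosine pan law: left**2 + right**2 == 1 at every position, so the
    sound's total power stays the same as it moves across the stereo field.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and 1.0")
    angle = (pan + 1) * math.pi / 4   # maps -1..1 onto 0..pi/2
    return math.cos(angle), math.sin(angle)

def pan_sweep(mono: list[float]) -> list[tuple[float, float]]:
    """Move a mono sound from hard left to hard right over its duration."""
    n = len(mono)
    stereo = []
    for i, sample in enumerate(mono):
        pan = -1.0 + 2.0 * i / (n - 1) if n > 1 else 0.0
        left_gain, right_gain = constant_power_pan(pan)
        stereo.append((sample * left_gain, sample * right_gain))
    return stereo

for position in (-1.0, 0.0, 1.0):
    left, right = constant_power_pan(position)
    print(f"pan {position:+.1f}: left {left:.3f}, right {right:.3f}")
```

At the center, each channel gets about 0.707 of the signal rather than 0.5. This is what keeps a sound from seeming to dip in level as it passes the middle.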

Spatial audio extends this concept to include a third, vertical dimension, with the aim of enabling full three-dimensional surround sound. The relative novelty of this technology means that there are still competing standards, but both leading standards—Dolby Atmos and DTS:X—are object-based, which means they work by mapping each sound in a recording to a specific location in a three-dimensional field: a virtual sphere centered on the listener.

Such ambitious standards also create a new challenge for mastering engineers. Colmenero says that careful consideration is required to master a record so it plays well on complicated surround systems while also sounding good on a conventional stereo.
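As a rough illustration of what “object-based” means, and of why a stereo version takes extra care, this sketch stores each sound as a hypothetical object with a direction on a sphere around the listener. It then folds each object down to two channels by discarding height and applying a constant-power pan law. This is not how Dolby Atmos or DTS:X renderers actually work. The `AudioObject` class, its angle conventions, and the naive fold-down are all assumptions made for the example.

```python
import math
from dataclasses import dataclass

@dataclass
class AudioObject:
    """Hypothetical sound object: a name plus a direction around the listener.

    azimuth_deg: 0 = straight ahead, -90 = hard left, +90 = hard right
    elevation_deg: 0 = ear level, +90 = directly overhead
    """
    name: str
    azimuth_deg: float
    elevation_deg: float

    def unit_vector(self) -> tuple[float, float, float]:
        """Point on a unit sphere centered on the listener (x = right, y = front, z = up)."""
        az = math.radians(self.azimuth_deg)
        el = math.radians(self.elevation_deg)
        return (math.cos(el) * math.sin(az), math.cos(el) * math.cos(az), math.sin(el))

def fold_down_to_stereo(obj: AudioObject) -> tuple[float, float]:
    """Naive stereo fold-down: throw away height, pan by left/right position only."""
    x, _, _ = obj.unit_vector()        # -1 (left) .. +1 (right)
    angle = (x + 1) * math.pi / 4      # constant-power pan law
    return math.cos(angle), math.sin(angle)

scene = [
    AudioObject("vocal", 0, 0),
    AudioObject("helicopter", -60, 45),
    AudioObject("rain", 90, 70),
]
for obj in scene:
    left, right = fold_down_to_stereo(obj)
    position = [round(c, 2) for c in obj.unit_vector()]
    print(f"{obj.name}: {position}, L={left:.2f} R={right:.2f}")
```

The rain is placed hard right but high overhead, and it lands noticeably closer to the center once height is thrown away. A sound placed directly overhead collapses to dead center. Decisions like these are where careful consideration comes in.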

Ultimately, though, Colmenero says, songs today get remastered “because [a record company] wants to introduce the catalog to streaming, or YouTube, or Atmos—or because you want to introduce the music to a new audience.”

Investigating the dark arts

Whatever the context, both mastering and remastering have the reputation in the music industry of being something of a dark art—a highly specialized skill best left to the handful of people who understand its secrets. So what does mastering—or remastering—a record actually involve? “I answer this question every day,” Jordan smiles, “because nobody knows what [mastering] is. I’ll have some band here and they’ll be like, ‘I thought we were done. Why are we paying this guy?’”

The answer, Jordan says, is “actually exceedingly simple. The technical explanation would be to take the stereo mixdown that was done in the mixing session and do some processing on it to try to make it sound as good as it can.”

It’s important here to distinguish between mixing and mastering. Mixing involves balancing the levels and frequencies of the various tracks that make up a multi-track recording; one track might contain vocals, another the guitar, another the keyboards, and so on. Mastering, by contrast, is done once mixing is complete, and it’s applied to the recording as a whole, rather than each individual component.

The main tools used in mastering are equalization (usually referred to as EQ) and compression. EQing allows for the adjustment of specific frequency bands, like a more sophisticated and flexible version of the “treble” and “bass” knobs on a car stereo. Compression, meanwhile, narrows the gap between the quietest and loudest parts of a signal. It turns down the peaks that cross a set threshold, which lets the engineer raise the overall level and bring up the quieter parts without the louder parts distorting.
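Both tools can be sketched in a few lines. The EQ below is a peaking filter built from the widely used Audio EQ Cookbook formulas, which is one common EQ design among many. The compressor is reduced to its static gain curve and leaves out the attack and release timing a real compressor has. All settings are hypothetical: a +3 dB boost around 100 Hz, a 4:1 ratio above -20 dB, and 6 dB of makeup gain.

```python
import cmath
import math

def peaking_eq_coeffs(f0_hz: float, gain_db: float, q: float, fs_hz: float):
    """Biquad coefficients (b, a) for a peaking EQ, per the Audio EQ Cookbook.

    Boosts (gain_db > 0) or cuts (gain_db < 0) a band centered on f0_hz;
    a higher q makes the band narrower.
    """
    amp = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0_hz / fs_hz
    alpha = math.sin(w0) / (2 * q)
    b = [1 + alpha * amp, -2 * math.cos(w0), 1 - alpha * amp]
    a = [1 + alpha / amp, -2 * math.cos(w0), 1 - alpha / amp]
    # Normalize so the leading denominator coefficient is 1.
    return [x / a[0] for x in b], [x / a[0] for x in a]

def biquad(samples, b, a):
    """Direct Form I: y[n] = b0 x[n] + b1 x[n-1] + b2 x[n-2] - a1 y[n-1] - a2 y[n-2]."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out

def response_db(b, a, f_hz, fs_hz):
    """Filter gain in dB at one frequency, from H(z) evaluated at z = e^(jw)."""
    z = cmath.exp(1j * 2 * math.pi * f_hz / fs_hz)
    h = (b[0] + b[1] / z + b[2] / z**2) / (a[0] + a[1] / z + a[2] / z**2)
    return 20 * math.log10(abs(h))

def compressor_gain_db(level_db: float, threshold_db: float, ratio: float, makeup_db: float) -> float:
    """Static compressor curve: above the threshold, output rises only 1/ratio as fast."""
    if level_db <= threshold_db:
        compressed = level_db
    else:
        compressed = threshold_db + (level_db - threshold_db) / ratio
    return compressed - level_db + makeup_db

b, a = peaking_eq_coeffs(100, 3.0, 0.7, 44_100)
print(f"EQ at 100 Hz: {response_db(b, a, 100, 44_100):+.2f} dB, "
      f"at 5 kHz: {response_db(b, a, 5_000, 44_100):+.2f} dB")

for level in (-30.0, -8.0):   # a quiet passage and a loud peak
    g = compressor_gain_db(level, threshold_db=-20, ratio=4, makeup_db=6)
    print(f"input {level:.0f} dB -> output {level + g:.0f} dB")
```

With these settings, a quiet passage at -30 dB comes out at -24 dB and a -8 dB peak comes out at -11 dB. The 22 dB gap between them shrinks to 13 dB, which is the “evening out” described above.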

Jordan compares the process to color correction for film: “You’re taking a [finished] movie—it’s made and it’s done and it’s edited and all that stuff—but you’re just making it look a little better in the theater or at home, maybe shifting the colors to make the film a little redder and warmer, or kind of a little bluer and brighter, something like that. This is extremely similar to what I’m doing: things kind of sound warmer and richer, or kind of cleaning things up and giving them a little more clarity.”

While the concept is simple, in practice it requires care and patience. “It’s simple but subtle,” Jordan says. “It’s just that I’m being insanely careful.” Part of the need for such care comes from the fact that working with the entire mix is a constant balancing act: “It’s very easy to, say, chase after the snare drum and try to make it sound better. And you get so focused on the snare drum that it’s only later you back up and realize, ‘Oh, we ruined the vocal.’”

As well as understanding how different parts of a mix interact, much of the skill of mastering comes from understanding the nuances and quirks of human hearing. Take the question of how loud we perceive a sound to be. In theory, we can look at a song’s waveform and conclude that if the amplitude of Part A is higher than the amplitude of Part B, we’ll hear the former as being louder than the latter.

In reality, however, there are many factors that influence our perception of loudness. One of these is a sound’s length. Jordan says that a sound that plays for less than 300 milliseconds is perceived as being quieter than a longer sound of the same volume. “You can prove this to yourself,” he says. “If you have [any sort of music software], you can take a recording of a snare drum and have it hit a certain [volume] level, and then have a guitar that peaks at the same level, and the guitar will sound a million times louder than the snare.”
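Jordan’s experiment can be mimicked with a crude numerical stand-in for the ear. This sketch assumes that perceived loudness tracks the sound energy accumulated over a short window. The 400 ms window is an assumption, not a figure from the article. Under that assumption, a brief, fast-decaying hit and a sustained note with identical peaks measure very differently. The code measures signal energy, not hearing itself, and the tones and decay rate are made up for the example.

```python
import math

RATE = 44_100

def decaying_tone(freq_hz: float, seconds: float, decay_per_s: float) -> list[float]:
    """Sine wave with an exponential decay, scaled so its highest peak is exactly 1.0."""
    n = int(RATE * seconds)
    raw = [math.exp(-decay_per_s * i / RATE) * math.sin(2 * math.pi * freq_hz * i / RATE)
           for i in range(n)]
    peak = max(abs(s) for s in raw)
    return [s / peak for s in raw]

def loudest_window_rms_db(samples: list[float], window_s: float = 0.4) -> float:
    """RMS level (dB) of the loudest window_s-second stretch: energy summed over time."""
    w = int(RATE * window_s)
    squares = [s * s for s in samples] + [0.0] * max(0, w - len(samples))
    running = sum(squares[:w])
    best = running
    for i in range(w, len(squares)):
        running += squares[i] - squares[i - w]   # slide the window one sample
        best = max(best, running)
    return 10 * math.log10(best / w)

snare = decaying_tone(200, 0.05, decay_per_s=60)   # short, fast-decaying hit
guitar = decaying_tone(200, 1.0, decay_per_s=0)    # sustained note

for name, sound in [("snare-like hit", snare), ("sustained note", guitar)]:
    print(f"{name}: peak {max(abs(s) for s in sound):.2f}, "
          f"loudest 400 ms RMS {loudest_window_rms_db(sound):.1f} dB")
```

Both sounds peak at exactly 1.0, but the sustained note carries far more energy over the window. That is the same mismatch between peak level and perceived loudness that Jordan describes.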

There’s also the question of where a given sound sits in the stereo field. “I do a lot of work in hip hop,” Colmenero says, “and things in mono can feel louder.” For that reason, he says, producers looking for a drum sound that punches hard will often keep that sound centered in the stereo field. A mono sound, he says, ensures that “your speakers are working together to really push the sound out.”
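One physical piece of Colmenero’s point can be sketched numerically. For a listener equidistant from both speakers, identical (mono) signals add in step, so their combined level rises about 6 dB over one speaker. Unrelated signals of the same level add only about 3 dB. This simplification ignores the room and the listener’s head. It also says nothing about the psychoacoustics of why mono “feels” louder. The random noise signals are synthetic stand-ins for real drum tracks.

```python
import math
import random

def rms(samples: list[float]) -> float:
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def summed_level_gain_db(left: list[float], right: list[float]) -> float:
    """How much louder two speakers are together than the left speaker alone, in dB,
    for a listener equidistant from both (room and head ignored)."""
    combined = [l + r for l, r in zip(left, right)]
    return 20 * math.log10(rms(combined) / rms(left))

rng = random.Random(0)
n = 100_000
drum = [rng.gauss(0.0, 0.1) for _ in range(n)]
other = [rng.gauss(0.0, 0.1) for _ in range(n)]

mono_gain = summed_level_gain_db(drum, drum)    # same signal in both speakers
wide_gain = summed_level_gain_db(drum, other)   # unrelated signals, equal level
print(f"same signal in both speakers: +{mono_gain:.1f} dB")
print(f"unrelated signals: +{wide_gain:.1f} dB")
```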

Experienced engineers have accumulated decades’ worth of knowledge of these sorts of subtleties. As well as working with the sounds that are there, some techniques deliberately remove part of a signal, relying on the listener’s brain to fill in the gaps. These involve “clipping” a signal: deliberately driving it to a level higher than the format or equipment can handle. Because a digital signal can’t go beyond its maximum value (known as full scale), the loudest parts of the waveform are literally clipped away, leaving a truncated wave that is often audibly distorted.

Generally, this is something to avoid, but in the hands of an engineer who knows what they’re doing, it can also be used to artistic effect. “You might have a smooth waveform, like a sine wave,” Colmenero says. “If you clip that wave, then you end up with more of a square or sawtooth pattern—and our brains perceive sawtooth and square waves as having an extra harmonic. So in shaving the edges off that natural tone, you’re creating a more complex waveform, and a more complex waveform then gives you extra tones to work with.”
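The effect Colmenero describes can be checked with a short sketch. It drives a pure sine wave past a fixed ceiling, flattens everything beyond it, and measures the harmonics that appear. This uses symmetric hard clipping, one simple form of clipping, which pushes a sine toward a square-ish shape and adds only odd harmonics (3rd, 5th, and so on). Asymmetric clipping would add even harmonics as well. The drive amount and signal length are arbitrary.

```python
import math

def sine(n: int, cycles: int) -> list[float]:
    """A whole number of sine cycles spread over n samples."""
    return [math.sin(2 * math.pi * cycles * i / n) for i in range(n)]

def hard_clip(samples: list[float], drive: float, ceiling: float = 1.0) -> list[float]:
    """Boost the signal by `drive`, then flatten anything beyond +/- ceiling."""
    return [max(-ceiling, min(ceiling, s * drive)) for s in samples]

def harmonic_level(samples: list[float], cycles: int, harmonic: int) -> float:
    """Amplitude of one harmonic, computed with a single-bin discrete Fourier transform."""
    n = len(samples)
    k = cycles * harmonic
    re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
    return 2 * math.hypot(re, im) / n

N, CYCLES = 4096, 8
clean = sine(N, CYCLES)
clipped = hard_clip(clean, drive=4.0)

for h in (1, 2, 3, 5):
    print(f"harmonic {h}: clean {harmonic_level(clean, CYCLES, h):.3f}, "
          f"clipped {harmonic_level(clipped, CYCLES, h):.3f}")
```

The clean sine has energy only at its fundamental. The clipped version gains substantial 3rd and 5th harmonics, which are the “extra tones to work with.”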

Mastering for the future

Given mastering’s origins in working around the restrictions of analogue audio, one might think it a dying art. Not so, says Jordan. While the days of having to make sure the needle stays in the groove are largely behind us, he says mastering remains as important as ever in 2025. One reason for this is, perhaps counterintuitively, the democratization of recording and mixing. Even a generation ago, recording music meant booking time in an expensive studio. Today, though, even basic, free-to-download digital audio workstation (DAW) software offers the sort of functionality that used to be the preserve of professional studios.

“It used to be that people were going to professional studios to make an album,” Jordan says. “Now they’re making something in their bedroom, and they’re doing it on headphones, or janky little [computer] speakers.” The problem with this is that a recording that you’ve gotten to sound great in your bedroom or on your headphones might well sound terrible in another context. “I use the fun house mirror as a metaphor,” Jordan says. “They’ve been looking at the audio through an incredibly distorted fun house mirror, [and] the system I have is as clear as possible, so we can hopefully see what’s going on.”

As a result, Jordan says, he’s busier than ever. “Don’t get me wrong,” he says. “It’s very specialized, and the joke is always that there are more astronauts than there are mastering engineers. But yeah, the room is the thing—more so even than the amps and the speakers—and that’s only become more important as people have moved away from the traditional studio.” He laughs. “I got lucky to be in an expanding field.”

This story is part of Popular Science’s Ask Us Anything series, where we answer your most outlandish, mind-burning questions, from the ordinary to the off-the-wall. Have something you’ve always wanted to know? Ask us.
