Takeaways from the DeepSeek-R1 model

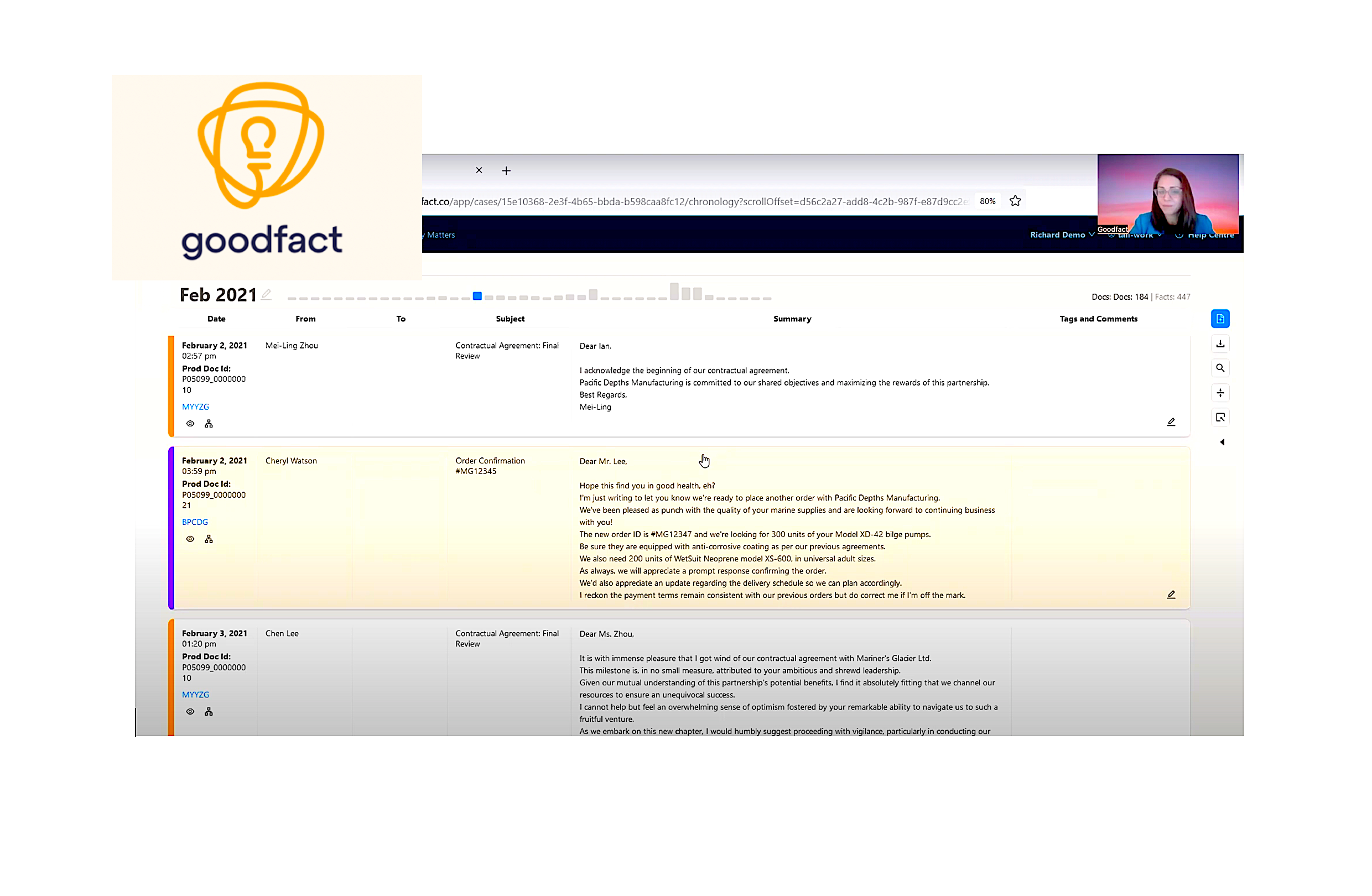

For software teams working with AI, the challenge has always been balancing capability with practicality. Recent ideas seem to improve on both fronts. Here are a few takeaways from yesterday's DeepSeek-R1 model launch that provide new perspectives.

Reasoning models

Reinforcement learning (RL), a technique where models learn by trial-and-error, can transform base AI systems into skilled problem solvers. Unlike traditional approaches that rely on pre-labeled datasets, RL-based post-training lets models self-improve through algorithmic rewards.

The GRPO algorithm (Group Relative Policy Optimization), first introduced with DeepSeekMath and used for DeepSeek-R1, streamlines RL by eliminating a key bottleneck: the “critic” model.

Traditional RL methods like PPO require two neural networks—an actor to generate responses and a critic to evaluate them. GRPO replaces the critic with statistical comparisons. For each problem, it generates multiple candidate solutions, then calculates rewards relative to the group’s average performance.

Here's an example of GRPO in action, designed to show how it evaluates and improves model responses:

Prompt: "Solve for x: 2x + 3 = 7"

Step 1 – Generate multiple responses

GRPO samples a few responses (for example, 3) from the current model:

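| Response | Output |
|---|---|
| 1 | `<think>Subtract 3: 2x = 4 → x = 2.</think> <answer>2</answer>` |
| 2 | `<think>Subtract 3: 2x = 7 → x = 3.5.</think> <answer>3.5</answer>` |
| 3 | `<think>2x + 3 = 7 → 2x = 4 → x = 2.</think> <answer>2</answer>` |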

Step 2 – Calculate rewards

GRPO uses rule-based rewards:

- Accuracy reward: +1 if the answer is correct (2), 0 otherwise.

- Format reward: +1 if the `<think>`/`<answer>` tags are used properly.

| Response | Accuracy Reward | Format Reward | Total Reward |
|---|---|---|---|
| 1 | 1 | 1 | 2 |
| 2 | 0 | 1 | 1 |
| 3 | 1 | 1 | 2 |
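The rule-based scoring above can be sketched in a few lines of Python. This is a minimal illustration, not DeepSeek's implementation: it assumes responses wrap their reasoning in `<think>` tags and the final answer in `<answer>` tags, and the function names and the exact regular expression are hypothetical.

```python
import re

# Assumed response layout: <think>reasoning</think> <answer>final answer</answer>
FORMAT_RE = re.compile(r"^<think>.*?</think>\s*<answer>.*?</answer>$", re.DOTALL)


def accuracy_reward(response: str, expected: str) -> int:
    """Return 1 if the text inside <answer>...</answer> equals the expected answer, else 0."""
    match = re.search(r"<answer>(.*?)</answer>", response, re.DOTALL)
    return int(match is not None and match.group(1).strip() == expected)


def format_reward(response: str) -> int:
    """Return 1 if the whole response follows the assumed tag layout, else 0."""
    return int(FORMAT_RE.match(response.strip()) is not None)


def total_reward(response: str, expected: str) -> int:
    return accuracy_reward(response, expected) + format_reward(response)


responses = [
    "<think>Subtract 3: 2x = 4 → x = 2.</think> <answer>2</answer>",
    "<think>Subtract 3: 2x = 7 → x = 3.5.</think> <answer>3.5</answer>",
    "<think>2x + 3 = 7 → 2x = 4 → x = 2.</think> <answer>2</answer>",
]
rewards = [total_reward(r, expected="2") for r in responses]
print(rewards)  # [2, 1, 2]
```

Because both checks are plain string rules, no learned reward model is needed to score a response.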

Step 3 – Compute relative advantages

GRPO calculates relative advantages using group statistics:

- Mean: (2 + 1 + 2) / 3 = 1.67

- Standard deviation: 0.47

| Response | Advantage Formula | Advantage |
|---|---|---|
| 1 | (2 - 1.67) / 0.47 | +0.7 |
| 2 | (1 - 1.67) / 0.47 | -1.4 |
| 3 | (2 - 1.67) / 0.47 | +0.7 |
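The same arithmetic can be checked with Python's standard library. This is a small sketch: the function name is hypothetical, and the zero-variance guard is an added assumption for the case where every response earns the same reward. The 0.47 figure corresponds to the population standard deviation (`statistics.pstdev`), not the sample one.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards):
    """Normalize each reward against the group's mean and population std dev."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    if sigma == 0:
        # Assumption: identical rewards carry no relative signal, so use zeros.
        return [0.0 for _ in rewards]
    return [(r - mu) / sigma for r in rewards]


advantages = group_relative_advantages([2, 1, 2])
print([round(a, 1) for a in advantages])  # [0.7, -1.4, 0.7]
```

Because the advantages are centered on the group mean, they sum to zero: rewarding some responses always means penalizing others in the same group.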

Step 4 – Update the model

GRPO adjusts the model’s policy using these advantages:

- Reinforce: Responses 1 and 3 (positive advantage) get "boosted."

- Penalize: Response 2 (negative advantage) gets "discouraged."
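To make "boosted" and "discouraged" concrete, here is a deliberately tiny toy model, not the real GRPO update. It assumes the policy is just a softmax over three logits, one per candidate response, and takes a single plain policy-gradient step. The actual algorithm updates the language model's token-level probabilities and also includes a clipped probability ratio and a KL penalty, both omitted here. All names and the learning rate are hypothetical.

```python
import math


def softmax(logits):
    """Convert logits into probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient_step(logits, advantages, lr=0.5):
    """One gradient-ascent step on sum_i A_i * log p_i with respect to the logits."""
    probs = softmax(logits)
    total_adv = sum(advantages)
    # d/d(logit_j) of sum_i A_i * log p_i  =  A_j - p_j * sum_i A_i
    grads = [a - p * total_adv for a, p in zip(advantages, probs)]
    return [x + lr * g for x, g in zip(logits, grads)]


logits = [0.0, 0.0, 0.0]        # toy policy: all three responses equally likely
advantages = [0.7, -1.4, 0.7]   # group-relative advantages from Step 3

before = softmax(logits)
after = softmax(policy_gradient_step(logits, advantages))
print([round(p, 2) for p in before])
print([round(p, 2) for p in after])
```

After the step, responses 1 and 3 become more likely and response 2 less likely, which is the qualitative effect the advantages are meant to have.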

After the GRPO update, the model learns to:

- Avoid mistakes (like 2x = 7).

- Prefer correct steps (2x = 4 → x = 2).

- Maintain proper formatting (the `<think>` and `<answer>` tags are preserved).

This example shows how GRPO iteratively steers models toward better outputs using a lightweight but effective approach: simple comparisons. Without a critic model, there is a significant reduction in memory and compute usage.

Small models, big impact

Another key insight challenges the “bigger is better” assumption: Applying RL directly to smaller models (for example, 7B parameters) yields limited gains. Instead, you can achieve better results by:

- Training large models (for example, with GRPO)

- Distilling their capabilities into smaller versions

The DeepSeek-R1 distilled 7B model outperformed many 32B-class models on reasoning tasks while requiring far less compute. Interestingly, this mirrors the software engineering principle to build a robust “reference implementation” first and then optimize it for production.
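A common recipe for this kind of distillation is to sample outputs from the large model and fine-tune the small model on them. The sketch below covers only the data-collection half and is an illustration under stated assumptions: `build_distillation_dataset`, `toy_teacher`, and the `is_acceptable` filter are hypothetical names, the teacher is a lookup table standing in for a real model, and the fine-tuning step itself is omitted.

```python
from typing import Callable, Dict, List


def build_distillation_dataset(
    prompts: List[str],
    teacher_generate: Callable[[str], str],
    is_acceptable: Callable[[str, str], bool],
) -> List[Dict[str, str]]:
    """Collect teacher completions that pass a filter, as prompt/completion pairs."""
    dataset = []
    for prompt in prompts:
        completion = teacher_generate(prompt)
        if is_acceptable(prompt, completion):
            dataset.append({"prompt": prompt, "completion": completion})
    return dataset


def toy_teacher(prompt: str) -> str:
    """Stand-in for a large RL-trained model: returns a canned answer or nothing."""
    canned = {
        "Solve for x: 2x + 3 = 7": "<think>2x = 4 → x = 2.</think> <answer>2</answer>",
    }
    return canned.get(prompt, "")


dataset = build_distillation_dataset(
    prompts=["Solve for x: 2x + 3 = 7", "Solve for x: x / 0 = 1"],
    teacher_generate=toy_teacher,
    is_acceptable=lambda prompt, completion: completion != "",
)
print(len(dataset))  # 1
```

The resulting pairs would then serve as supervised training data for the smaller model, so the expensive RL run happens only once, on the large model.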

Code training matters

The DeepSeekMath paper that introduced GRPO offers another interesting insight: pre-training on code improves a model's reasoning, for example when solving math problems.

Code’s structured syntax appears to teach skills transferable to broad topics such as solving equations or logic puzzles.

Conclusion

The DeepSeek-R1 launch underscores how innovation in AI training can bridge the gap between performance and practicality. By replacing traditional RL’s resource-heavy “critic” with GRPO’s group-based comparisons, teams can streamline model optimization while maintaining accuracy.

Equally compelling is the success of distilling large RL-trained models into smaller, efficient versions—a strategy that mirrors proven software engineering practices.

Finally, the link between code pre-training and reasoning highlights the value of cross-disciplinary learning.

Together, these insights offer a roadmap for developing AI systems that are both capable and cost-effective, balancing cutting-edge results with real-world deployment constraints.
