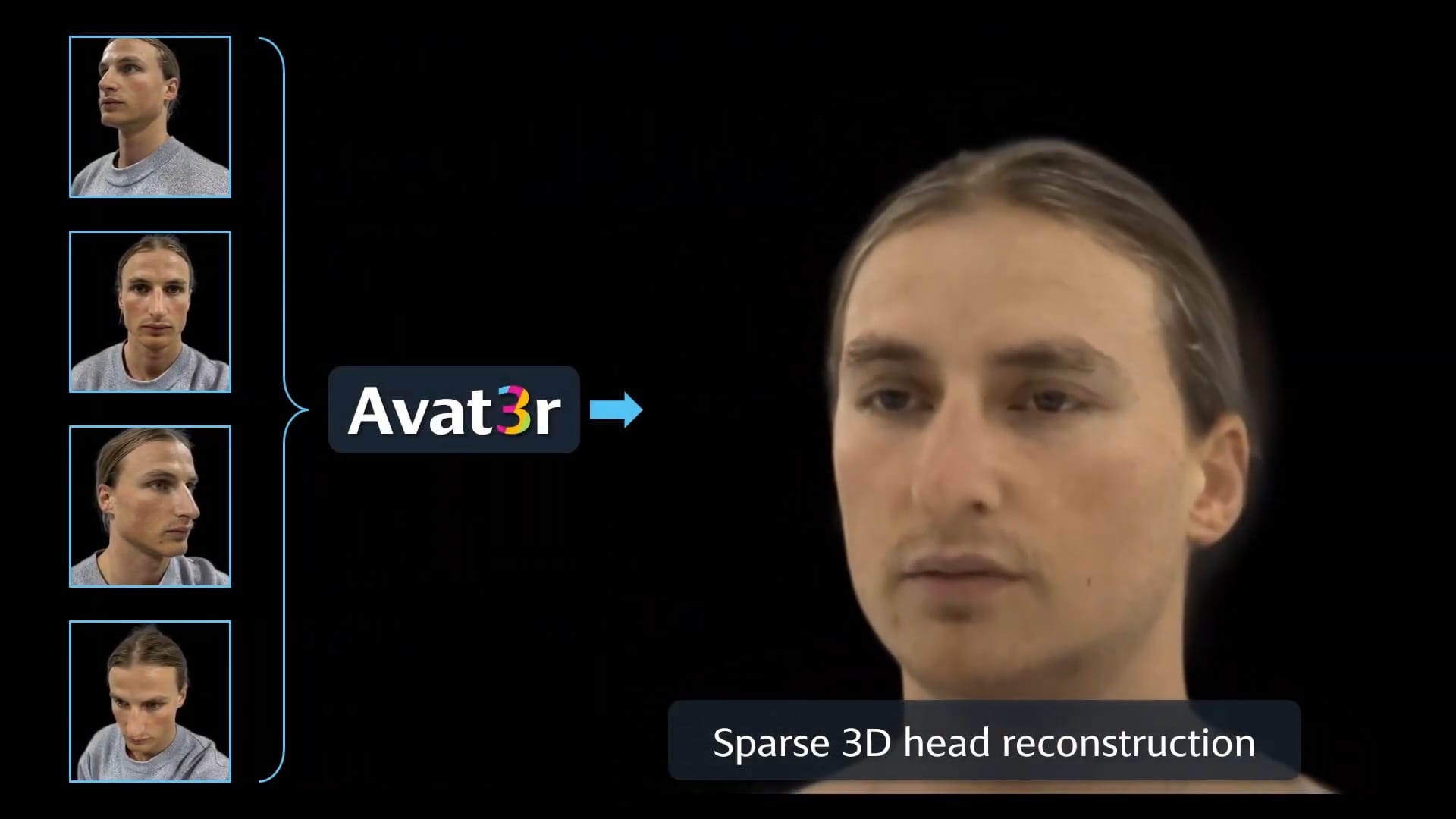

Meta Researchers Generate Photorealistic Avatars From Just Four Selfies

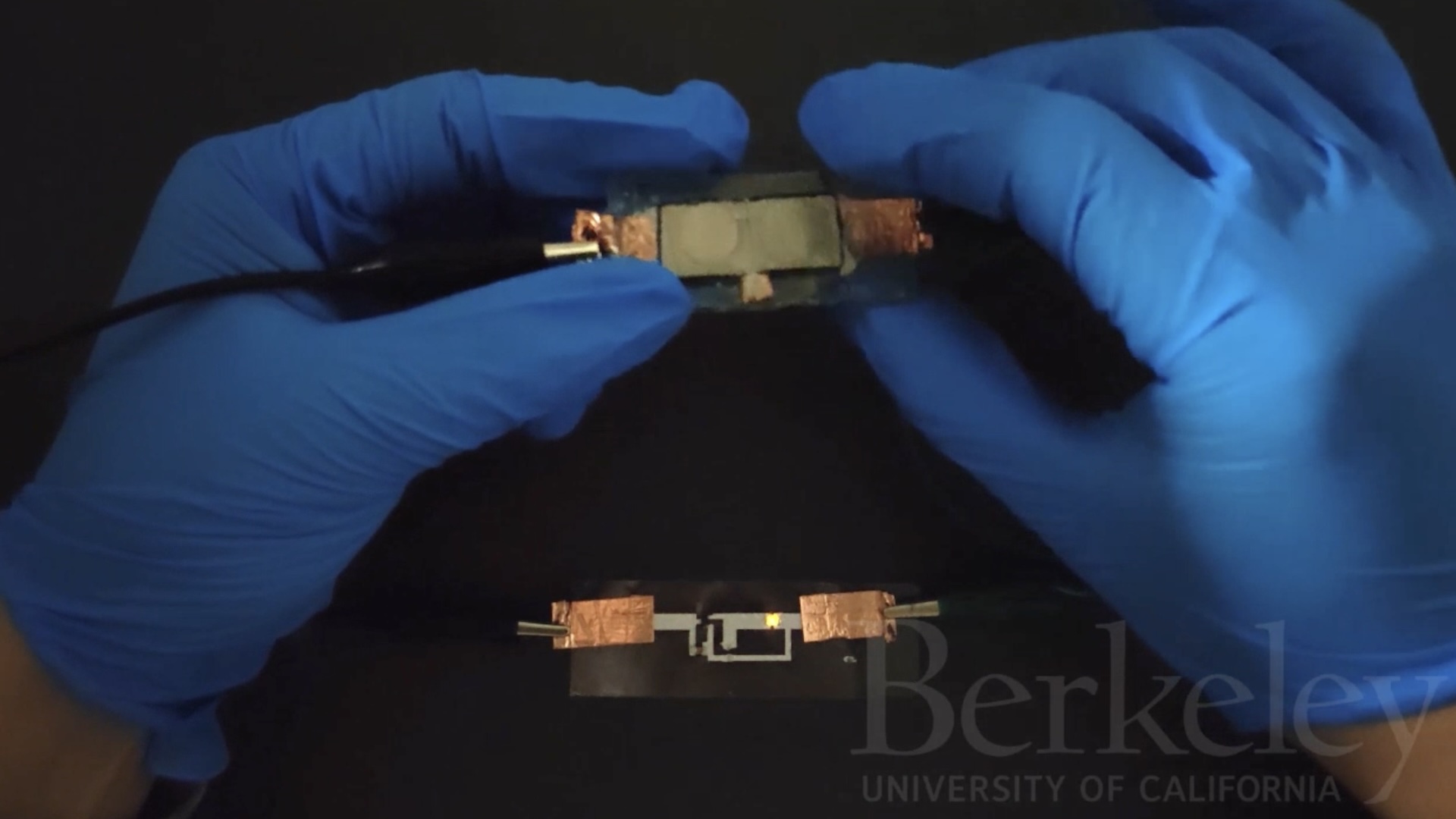

Meta researchers built a "large reconstruction model (LRM)" that can generate an animatable photorealistic avatar head in minutes from just four selfies.

Meta researchers built a "large reconstruction model (LRM)" that can generate an animatable photorealistic avatar head in minutes from just four selfies.

Meta has been researching photorealistic avatar generation and animation for more than six years now, and its highest-quality version even crosses the uncanny valley, in our experience.

One of the biggest challenges for photorealistic avatars to date has been the amount of data and time needed to generate them. Meta's highest-quality system requires a very expensive specialized capture rig with over 100 cameras. The company has shown research on generating lower quality avatars with a smartphone scan, but this required making 65 facial expressions over the course of more than three minutes, and the data captured took multiple hours to process on a machine with four high end GPUs.

Now, in a new paper called Avat3r, researchers from Meta and Technical University of Munich are presenting a system that can generate an animatable photorealistic avatar head from just four phone selfies, and the processing takes a matter of minutes, not hours.

On a technical level, Avat3r builds on the concept of a large reconstruction model (LRM), leveraging a transformer for 3D visual tasks in the same sense that large language models (LLMs) do for natural language. This is often called a vision transformer, or ViT. This vision transformer is used to predict a set of 3D Gaussians, akin to the Gaussian splatting you may have heard about in the context of photorealistic scenes such as Varjo Teleport, Meta's Horizon Hyperscapes, Gracia, and Niantic's Scaniverse.

The specific implementation of Avat3r's animation system isn't driven by a VR headset's face and eye tracking sensors, but there's no reason it couldn't be adapted to leverage this as the input.

However, while Avat3r's data and compute requirements for generation are remarkably low, it's nowhere near suitable for real-time rendering. According to the researchers, the end result system runs at just 8 FPS on an RTX 3090. Still, in AI it's common to see subsequent iterations of new ideas achieve orders of magnitude optimizations, and Avat3r's approach shows a promising path to one day, eventually, letting headset owners set up a photorealistic avatar with a few selfies and minutes of generation time.

.jpg)

![The breaking news round-up: Decagear launches today, Pimax announces new headsets, and more! [APRIL FOOL’S]](https://i0.wp.com/skarredghost.com/wp-content/uploads/2025/03/lawk_glasses_handson.jpg?fit=1366%2C1025&ssl=1)