Hands-on: Prophesee enables eye tracking with very low power consumption


At AWE, I was able to go hands-on with a new way of performing eye tracking: using event cameras from Prophesee.

What are event cameras?

Before sharing my hands-on impressions, it’s a good idea to discuss what event cameras are. If this question does not sound new to you, it is because last year, again at AWE US, I was already able to go hands-on with event cameras: not for eye tracking, but for hand tracking. Ultraleap was showing a very cool demo using them… its last demo before dying.

Since I have already written an article about it, I can happily copy-paste my definition of event cameras from there (which was already a copy-paste from Wikipedia… copypasteception!):

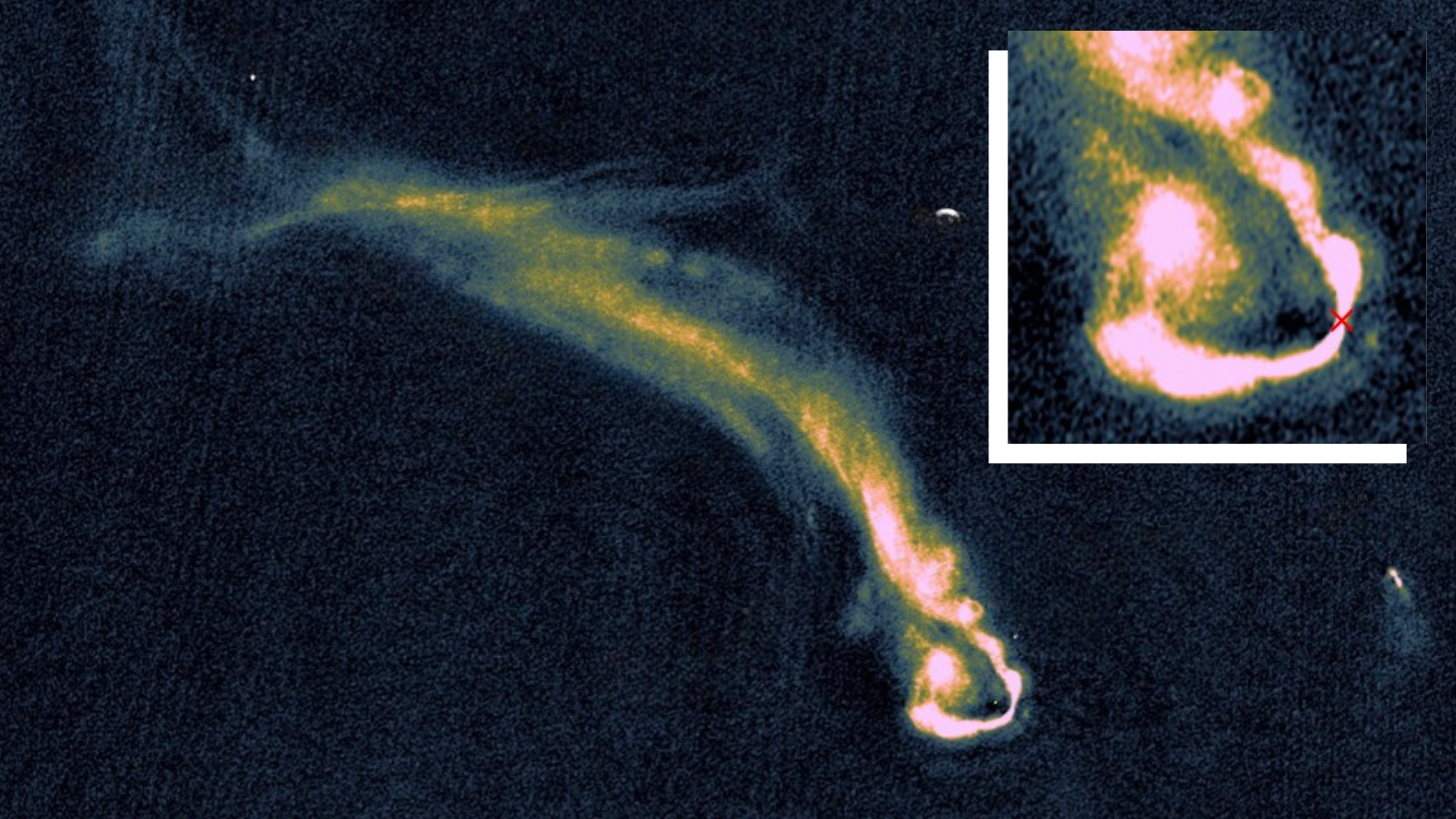

An event camera, also known as a neuromorphic camera, silicon retina or dynamic vision sensor, is an imaging sensor that responds to local changes in brightness. Event cameras do not capture images using a shutter as conventional (frame) cameras do. Instead, each pixel inside an event camera operates independently and asynchronously, reporting changes in brightness as they occur, and staying silent otherwise.

Event camera pixels independently respond to changes in brightness as they occur. Each pixel stores a reference brightness level, and continuously compares it to the current brightness level. If the difference in brightness exceeds a threshold, that pixel resets its reference level and generates an event: a discrete packet that contains the pixel address and timestamp. Events may also contain the polarity (increase or decrease) of a brightness change, or an instantaneous measurement of the illumination level, depending on the specific sensor model. Thus, event cameras output an asynchronous stream of events triggered by changes in scene illumination.
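To make the per-pixel mechanism described above more concrete, here is a minimal Python sketch that emulates it on a sequence of sampled brightness images. It is only an illustration under simplifying assumptions: real sensors work asynchronously in dedicated circuitry (and many respond to changes in log intensity rather than raw brightness), while here the pixels are checked at discrete sample times. The names `generate_events`, `Event` and the `threshold` value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Event:
    x: int         # pixel column
    y: int         # pixel row
    t: float       # timestamp of the sample that triggered the event
    polarity: int  # +1 for a brightness increase, -1 for a decrease


def generate_events(frames, timestamps, threshold=0.2):
    """Emulate event-camera pixels on a list of 2D brightness samples.

    frames: list of 2D lists (rows of brightness values), all the same size.
    timestamps: one timestamp per frame.
    threshold: hypothetical brightness difference needed to fire an event.
    """
    height, width = len(frames[0]), len(frames[0][0])
    # Each pixel keeps its own reference level, initialised from the first sample.
    reference = [row[:] for row in frames[0]]
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y in range(height):
            for x in range(width):
                diff = frame[y][x] - reference[y][x]
                if abs(diff) > threshold:
                    events.append(Event(x, y, t, 1 if diff > 0 else -1))
                    # The pixel resets its reference to the current level.
                    reference[y][x] = frame[y][x]
                # Otherwise the pixel stays silent and emits nothing.
    return events


frames = [
    [[0.5, 0.5], [0.5, 0.5]],
    [[0.5, 0.9], [0.5, 0.5]],  # top-right pixel gets brighter
    [[0.5, 0.9], [0.1, 0.5]],  # bottom-left pixel gets darker
]
events = generate_events(frames, [0.0, 0.001, 0.002])
# → [Event(x=1, y=0, t=0.001, polarity=1), Event(x=0, y=1, t=0.002, polarity=-1)]
```

Note how the unchanged pixels produce no output at all: that silence is what keeps the data stream (and, in real hardware, the power draw) so small.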

Long story short: an event camera does not capture full image frames like a standard camera; it only reports where and when the brightness changed, which is roughly like seeing the difference between two consecutive frames.

One of the leading companies making event cameras is Prophesee, and below you can see a company video explaining event cameras and showing what the output of this special type of camera looks like:

Why use event cameras?

After this explanation, you may be pretty puzzled: why should you use a camera that doesn’t let you properly see what is happening, and basically just shows you the areas of motion? Well, it turns out there are a few interesting reasons.

The first and most important reason is that their power consumption is ridiculously low. The exact amount depends on the camera: the one used by Ultraleap consumes 23 mW, while the one for eye tracking consumes as little as 2 mW (yes, MILLIwatts) when in use and 16 µW (yes, MICROwatts) in standby. We all know that one of the keys to comfortable AR smartglasses is reducing power consumption to the minimum possible, and event cameras do exactly that: they are incredibly power-efficient.
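To put these figures in perspective, the short Python calculation below converts the quoted power values into the energy each mode would draw over a day. The 24-hour duration is an arbitrary assumption chosen for illustration, not a usage pattern reported by Prophesee or Ultraleap; the power values are the ones quoted above.

```python
# Power figures quoted in the article, in milliwatts.
POWER_MW = {
    "Ultraleap hand-tracking event camera": 23.0,
    "eye-tracking event camera (active)": 2.0,
    "eye-tracking event camera (standby)": 0.016,  # 16 µW
}


def energy_mwh(power_mw: float, hours: float) -> float:
    """Energy in milliwatt-hours drawn at a constant power for a given time."""
    return power_mw * hours


HOURS = 24  # assumption: one full day of continuous operation, for illustration
for name, power in POWER_MW.items():
    print(f"{name}: {energy_mwh(power, HOURS):.3f} mWh per day")
```

Running it prints 552.000, 48.000 and 0.384 mWh per day respectively: even a full day of active eye tracking stays in the tens of milliwatt-hours.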

The other strong point is speed: since they do not have to read out a full image from a big array of pixels, they can react very quickly. The Prophesee event cameras used for eye tracking have an equivalent framerate of more than 1 kHz. Consider that with standard cameras we are already happy with 60 Hz, and here we have 1000. This means that if your algorithm is fast enough, you can have a tracking latency under 1 ms.
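Since events arrive as a continuous stream rather than as frames, a tracker can choose how often to process them. One common way to do this (an assumption here, not necessarily what Prophesee or 7Invensun do) is to slice the stream into fixed time windows: with 1 ms windows you get the equivalent of 1000 updates per second, while a 60 Hz camera delivers a new frame only every 1000/60 ≈ 16.7 ms. The sketch below uses hypothetical `(x, y, t_us, polarity)` tuples with timestamps in microseconds.

```python
from collections import defaultdict


def bin_events(events, window_us=1000):
    """Group (x, y, t_us, polarity) events into consecutive time windows.

    Returns a dict mapping window index -> list of events in that window.
    A 1000 µs (1 ms) window corresponds to a 1 kHz update rate.
    """
    windows = defaultdict(list)
    for x, y, t_us, polarity in events:
        windows[t_us // window_us].append((x, y, t_us, polarity))
    return dict(windows)


def update_rate_hz(window_us):
    """Number of windows (i.e. tracker updates) per second."""
    return 1_000_000 / window_us


# Hypothetical events: two in the first millisecond, then one in each of the next two.
stream = [(10, 12, 150, 1), (11, 12, 900, -1), (40, 8, 1200, 1), (41, 8, 2999, 1)]
windows = bin_events(stream)
```

Shrinking `window_us` raises the update rate, at the cost of fewer events (and so less information) per window; the tracker's own processing time then becomes the real limit on latency.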

Then there is the privacy factor. Standard cameras used for eye tracking capture everything about your eye, and a malicious actor could use those images to infer all sorts of data about you. Since event cameras never capture a full image of your eye, they make this much more difficult. So you get the detection of your eye movements, but in a privacy-safe way.

Hands-on eye tracking with Prophesee event cameras

When I arrived at the Prophesee booth, I was given a pair of glasses frames with eye-tracking sensors installed on them. Since Prophesee has recently announced a collaboration with the famous eye-tracking company Tobii, I thought the system was made by Tobii. Etienne, the Prophesee employee at the booth, corrected me and said that the demo was made in collaboration with 7Invensun, the Chinese company that is a leader in eye-tracking technologies (they’re friends of mine; I even met them during my first trip to China).

The glasses were not a commercial product, just frames with the eye-tracking sensors mounted on them. I noticed a front camera, but it was not used for the tracking: it streamed the user’s point of view to a nearby PC, to show how the eye tracking was performing (more on this later). On the inside, there were two sensors, one for each eye. To be exact, each eye had two IR LEDs to illuminate it and an event camera to capture the eye “images”. I guess that the sensors were provided by Prophesee, while 7Invensun developed the mount and all the software that performs the eye tracking.

As usual with eye tracking, I had to perform a calibration. Etienne used a piece of paper with a target on it: he moved it to 3 different positions in front of me and asked me to look at it in each of them. After this very quick procedure, the system was ready. I have done many eye-tracking calibrations in my career, but this was the first time a human was actively moving the point to look at (on a piece of paper!) in front of me. But hey, as long as it works…
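We don’t know how 7Invensun’s software maps eye measurements to a gaze point, but a classic textbook model helps explain why three targets can be enough. If you assume the gaze point is an affine function of a 2D eye feature (for instance an estimated pupil position), three non-collinear calibration points exactly determine its six coefficients. The Python sketch below solves that system with Cramer’s rule; the function names and example coordinates are hypothetical.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, b):
    """Solve m @ v = b for a 3x3 system using Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("calibration points are collinear; affine map is undetermined")
    solution = []
    for col in range(3):
        # Replace column `col` of m with b and take the ratio of determinants.
        mc = [row[:col] + [b[i]] + row[col + 1:] for i, row in enumerate(m)]
        solution.append(det3(mc) / d)
    return solution


def calibrate(eye_points, targets):
    """Fit gaze = A @ [ex, ey, 1] from exactly three (eye feature, target) pairs.

    eye_points: three (ex, ey) eye-feature measurements (hypothetical units).
    targets: three (gx, gy) positions the user was asked to look at.
    Returns a function mapping an eye measurement to an estimated gaze point.
    """
    m = [[ex, ey, 1.0] for ex, ey in eye_points]
    coeff_x = solve3(m, [gx for gx, _ in targets])
    coeff_y = solve3(m, [gy for _, gy in targets])

    def gaze(ex, ey):
        v = (ex, ey, 1.0)
        return (sum(c * x for c, x in zip(coeff_x, v)),
                sum(c * x for c, x in zip(coeff_y, v)))

    return gaze


# Hypothetical calibration: eye features measured while looking at three targets.
gaze = calibrate([(0, 0), (1, 0), (0, 1)], [(10, 20), (30, 20), (10, 50)])
print(gaze(0.5, 0.5))  # → (20.0, 35.0)
```

Real systems typically use more targets and richer (often nonlinear) models fitted by least squares, which tolerates measurement noise better than an exact three-point fit.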

After the calibration, I started looking at various things, like the tip of my index finger, my thumb, etc., and on the laptop mirroring my view I could see that the orange dot marking my gaze always went where I was looking. This showed that the system was working.

Prophesee eye tracking demo

It was pretty cool to see eye tracking work with this new type of hardware, and with so few resources consumed. Unfortunately, I can’t make any claims about the accuracy: the company says that the latency is lower than 1 ms and the accuracy is better than 1 degree, but with such a quick and dirty test, it is impossible for me to verify this. I could have gotten a better sense of the quality if I had been given an AR interface to look at, like the Vision Pro menu, but a big orange dot on an image mirrored to a PC is not a good way to assess it (also because the mirroring itself adds latency). Still, I can say that, for what I was able to try, the system worked very well: it was reactive, and it detected the object I was looking at every time.
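To get a feel for what an accuracy of 1 degree means in practice, you can convert an angular error into an offset on whatever you are looking at: for a target at distance d, the offset is roughly d·tan(θ). The viewing distances below are arbitrary examples chosen for illustration, not part of Prophesee’s specification.

```python
import math


def gaze_error_cm(angle_deg: float, distance_cm: float) -> float:
    """Approximate on-target offset (cm) caused by an angular gaze error.

    Assumes the target plane is perpendicular to the line of sight.
    """
    return distance_cm * math.tan(math.radians(angle_deg))


for distance in (40, 100, 200):  # assumption: example viewing distances in cm
    print(f"1° error at {distance} cm ≈ {gaze_error_cm(1.0, distance):.1f} cm")
```

This prints about 0.7 cm, 1.7 cm and 3.5 cm, which is small enough to tell apart a fingertip from a thumb at arm’s length, but shows why fine-grained UI targets need a tighter error budget.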

You can watch my full hands-on session, recorded at the Prophesee booth at AWE, in the video below.

Final considerations

Combining the results of this hands-on session at the Prophesee (+ 7Invensun) booth with last year’s at the Ultraleap (+ Prophesee) booth, I can say I’m pretty intrigued by event cameras and the work that Prophesee is doing.

If companies manage to create tracking algorithms for them, we can have eye tracking and hand tracking on glasses with a very high framerate and very low power consumption, all while guaranteeing more privacy to the user. This is incredibly promising: it is exactly what we need to build the AR glasses of the future.

There are certainly some shortcomings, and I guess that it is “easier” to perform tracking when you have all the data of full image frames, but if tracking algorithms based on event cameras keep improving, they could gradually replace the standard ones. I’ll definitely keep an eye (pun intended) on Prophesee in the upcoming months.

The post Hands-on: Prophesee enables eye tracking with very low power consumption appeared first on The Ghost Howls.