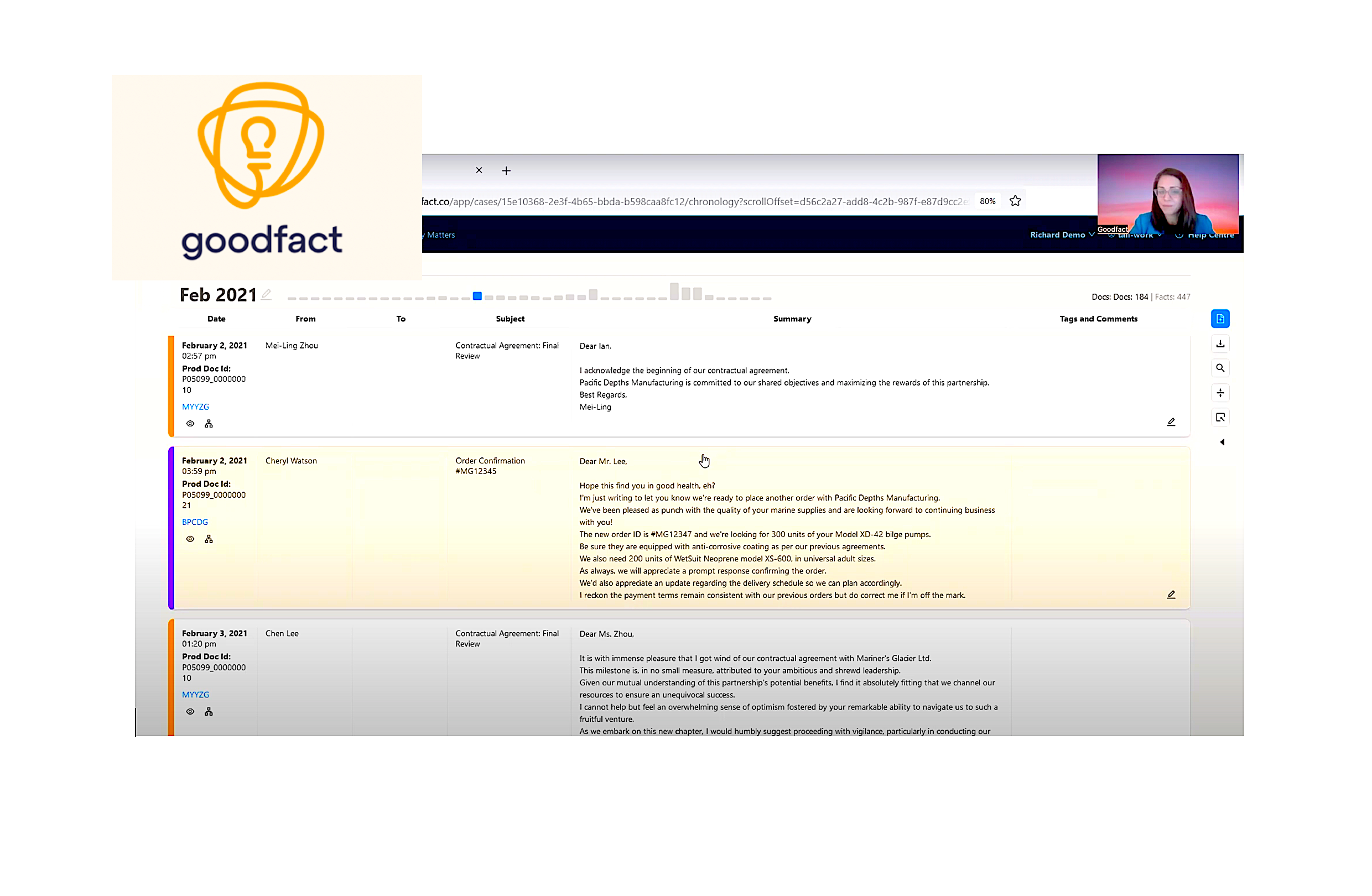

What I think will be the impact of AI on developer jobs

- or will AI replace software developers?

First, let's address the elephant in the room. Neither I nor anyone else knows yet if AGI is possible. If it is, and someone actually manages to build it, there's little point in spending any time on the question: the impact on all areas of society would be so big that it makes no sense to evaluate the matter from just the perspective of the IT market.

But yes, ours would probably be one of the very first professions to vanish, since the majority of our work happens in a purely digital environment and because software can be easily tested for validity (sometimes it can even be mathematically proven to be right). I've seen many people a lot smarter than I am saying that AGI may be possible, just probably not with the LLM technology we are using today, so perhaps we still have a few years to go - I think Sabine Hossenfelder has some interesting takes on this, go check her videos on YouTube.

With that cleared up, we can focus on more practical and actionable scenarios.

If there's no AGI, then we first need to consider how far LLM technology can go. Starting with hallucinations: although LLMs get better at this with every new iteration, there will certainly be a limit to it. That's because hallucinations are intrinsic to this technology. The same thing that causes hallucinations is what made LLMs possible. The first consequence is that for any minimally relevant application we will need a person in the loop reviewing the work of the LLM. That is to reduce the chances that it breaks applications, leading to financial loss or more severe consequences depending on the context.

The other part of it is that this kind of AI works best when prompted to do the things it was extensively trained on: "Build an iOS app for an airline company", "write a merge sort algorithm in Python". For these well-known use cases it can yield excellent results even if you provide very little context. But if you want anything more specific or less usual, you are going to need to write a much more detailed prompt, and you will probably need a few rounds of iteration before you have a reasonable result. This again means we need someone working with the LLM.

So while I do think we will always need developers reviewing the output of LLMs, I also agree we will naturally need less and less of this as the technology evolves. In that sense we could say that there will be fewer jobs for developers and the ones available will be for senior positions.

That was the "programming" side of the debate. We know writing code is just a part of the job. There's another significant chunk of it that is related to things like aligning expectations with stakeholders, understanding context and debating technical and product solutions. I don't see an LLM emailing the CEO of a company requesting clarification on a decision, or participating in a meeting with unprompted comments and opinions - someone will certainly build this, but I think we will quickly figure out it's best to turn it off.

The skills beyond writing code are a significant part of the job and they are not going away. We'll still need engineers balancing tradeoffs, negotiating priorities, managing risk and collaborating with users and with people from other areas of the company. LLMs are much less likely to take on these things than they are to write code. But it's also important to realize that writing code was never where the value was. The value is in delivering great products to users, and that requires much more than programming.

For the last part of my argument, we need to look back in history. How many times has the introduction of tools that significantly increased developer productivity resulted in fewer jobs? What we've seen is actually the opposite: every advance has ended up empowering more people to enter the field and opened opportunities for companies to build better and more. Which leads to my last point: companies hire to outperform their competitors, not to execute a fixed backlog. Every developer now has access to LLMs, so the playing field is level. Justifying hiring freezes with the productivity gains of AI is just a temporary phenomenon that will last until companies realize they are going to lose clients to competitors that are building faster.

To conclude, no, I don't think the impact of LLMs on software developers' jobs will be as big as is being predicted. But I'm certain that writing code will become less and less of a differentiator for engineers who want to keep their jobs or who are trying to join the market. For these people I've written a book with insights on how you can improve your skills beyond writing code and become a more strategic engineer. Go check it out.

I had an amazing chat with Mat Henshall about this topic, which inspired me to write about it. Some of the ideas in this post were drafted from that conversation. Gergely Orosz and Addy Osmani wrote about this topic in much more depth than I have, and I highly recommend reading their post "How AI-assisted coding will change software engineering: hard truths".
