The EU’s AI Act

Have you ever been in a group project where one person decided to take a shortcut, and suddenly, everyone ended up under stricter rules? That’s essentially what the EU is saying to tech companies with the AI Act: “Because some of you couldn’t resist being creepy, we now have to regulate everything.” This legislation isn’t just a slap on the wrist—it’s a line in the sand for the future of ethical AI.

Here’s what went wrong, what the EU is doing about it, and how businesses can adapt without losing their edge.

When AI Went Too Far: The Stories We’d Like to Forget

Target and the Teen Pregnancy Reveal

One of the most infamous examples of AI gone wrong came to light in 2012, when Target was reported to be using predictive analytics to market to pregnant customers. By analyzing shopping habits (think unscented lotion and prenatal vitamins), the retailer reportedly identified a teenage girl as pregnant before she had told her family. Imagine her father’s reaction when baby coupons started arriving in the mail. It wasn’t just invasive; it was a wake-up call about how much data we hand over without realizing it.

Clearview AI and the Privacy Problem

On the law enforcement front, Clearview AI built a massive facial recognition database by scraping billions of images from the internet. Police departments used it to identify suspects, but it didn’t take long for privacy advocates to cry foul. People discovered their faces were part of this database without their consent, and lawsuits followed. This wasn’t just a misstep; it was a full-blown controversy about surveillance overreach.

The EU’s AI Act: Laying Down the Law

The EU has had enough of these oversteps. Enter the AI Act: the first major legislation of its kind, categorizing AI systems into four risk levels:

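- Minimal Risk: Systems like AI-powered spam filters or engines that recommend books. The stakes are low, so there is little oversight.

- Limited Risk: Systems like chatbots, which must be transparent (users need to know they are talking to an AI) but face little else.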

- High Risk: This is where things get serious—AI used in hiring, law enforcement, or medical devices. These systems must meet stringent requirements for transparency, human oversight, and fairness.

- Unacceptable Risk: Think dystopian sci-fi—social scoring systems or manipulative algorithms that exploit vulnerabilities. These are outright banned.

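For companies operating high-risk AI, the EU demands a new level of accountability. That means documenting how systems work, ensuring explainability, and submitting to conformity assessments. If you don’t comply, the fines are enormous: up to €35 million or 7% of global annual revenue, whichever is higher, for the most serious violations such as deploying a banned practice, and up to €15 million or 3% for breaching most other obligations, including the high-risk requirements.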
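To make the "whichever is higher" rule concrete, here is a minimal Python sketch of the arithmetic. The function name `max_fine_eur` and its parameters are hypothetical, chosen only for illustration; the defaults are the €35 million and 7% figures quoted above, and the result is a ceiling on the fine, not a prediction of what a regulator would actually impose.

```python
from decimal import Decimal


def max_fine_eur(global_annual_revenue_eur,
                 fixed_cap_eur=Decimal("35000000"),
                 revenue_share=Decimal("0.07")):
    """Return the upper bound of a fine: the higher of a fixed amount
    or a share of global annual revenue (hypothetical helper)."""
    revenue = Decimal(global_annual_revenue_eur)
    return max(fixed_cap_eur, revenue * revenue_share)


# A company with EUR 100 million in revenue: 7% is EUR 7 million,
# so the fixed EUR 35 million amount is the higher figure.
small = max_fine_eur(100_000_000)

# A company with EUR 1 billion in revenue: 7% is EUR 70 million,
# which exceeds the fixed amount.
large = max_fine_eur(1_000_000_000)
```

The two figures cross at €500 million in revenue (7% of €500 million is €35 million). Below that, the fixed amount sets the ceiling; above it, the percentage does.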

Why This Matters (and Why It’s Complicated)

The Act is about more than just fines. It’s the EU saying, “We want AI, but we want it to be trustworthy.” At its heart, this is a “don’t be evil” moment, but achieving that balance is tricky.

On one hand, the rules make sense. Who wouldn’t want guardrails around AI systems making decisions about hiring or healthcare? But on the other hand, compliance is costly, especially for smaller companies. Without careful implementation, these regulations could unintentionally stifle innovation, leaving only the big players standing.

Innovating Without Breaking the Rules

For companies, the EU’s AI Act is both a challenge and an opportunity. Yes, it’s more work, but leaning into these regulations now could position your business as a leader in ethical AI. Here’s how:

- Audit Your AI Systems: Start with a clear inventory. Which of your systems fall into the EU’s risk categories? If you don’t know, it’s time for a third-party assessment.

- Build Transparency Into Your Processes: Treat documentation and explainability as non-negotiables. Think of it as labeling every ingredient in your product—customers and regulators will thank you.

- Engage Early With Regulators: The rules aren’t static, and you have a voice. Collaborate with policymakers to shape guidelines that balance innovation and ethics.

- Invest in Ethics by Design: Make ethical considerations part of your development process from day one. Partner with ethicists and diverse stakeholders to identify potential issues early.

- Stay Dynamic: AI evolves fast, and so do regulations. Build flexibility into your systems so you can adapt without overhauling everything.
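The first step above, the inventory, can start as something very small. The following is a rough sketch, assuming a team tracks its systems in code; the `AISystem` record, the `audit_actions` helper, and the example systems are all hypothetical. The risk tier itself has to come from a legal or third-party review, so the code only records that decision and flags follow-up work.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


@dataclass
class AISystem:
    name: str
    purpose: str
    tier: Optional[RiskTier] = None  # None means "not yet classified"
    documented: bool = False


def audit_actions(systems):
    """Map each system name to a suggested next step (illustrative only)."""
    actions = {}
    for s in systems:
        if s.tier is None:
            actions[s.name] = "classify (consider a third-party assessment)"
        elif s.tier is RiskTier.UNACCEPTABLE:
            actions[s.name] = "discontinue: banned practice"
        elif s.tier is RiskTier.HIGH and not s.documented:
            actions[s.name] = "document, add human oversight, prepare for audit"
        elif s.tier is RiskTier.LIMITED:
            actions[s.name] = "add transparency notice"
        else:
            actions[s.name] = "periodic review"
    return actions


# Hypothetical inventory for illustration.
inventory = [
    AISystem("resume-screener", "ranks job applicants", RiskTier.HIGH),
    AISystem("support-bot", "answers customer questions", RiskTier.LIMITED),
    AISystem("spam-filter", "filters inbound email", RiskTier.MINIMAL),
    AISystem("churn-model", "predicts cancellations"),
]
actions = audit_actions(inventory)
```

Keeping unclassified systems as an explicit state (`tier=None`) makes the "if you don't know" case visible instead of letting it default silently to a low-risk tier.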

The Bottom Line

The EU’s AI Act isn’t about stifling progress; it’s about creating a framework for responsible innovation. It’s a reaction to the bad actors who’ve made AI feel invasive rather than empowering. By stepping up now—auditing systems, prioritizing transparency, and engaging with regulators—companies can turn this challenge into a competitive advantage.

The message from the EU is clear: if you want a seat at the table, you need to bring something trustworthy. This isn’t about “nice-to-have” compliance; it’s about building a future where AI works for people, not at their expense.

And if we do it right this time? Maybe we really can have nice things.

The post The EU’s AI Act appeared first on Gigaom.

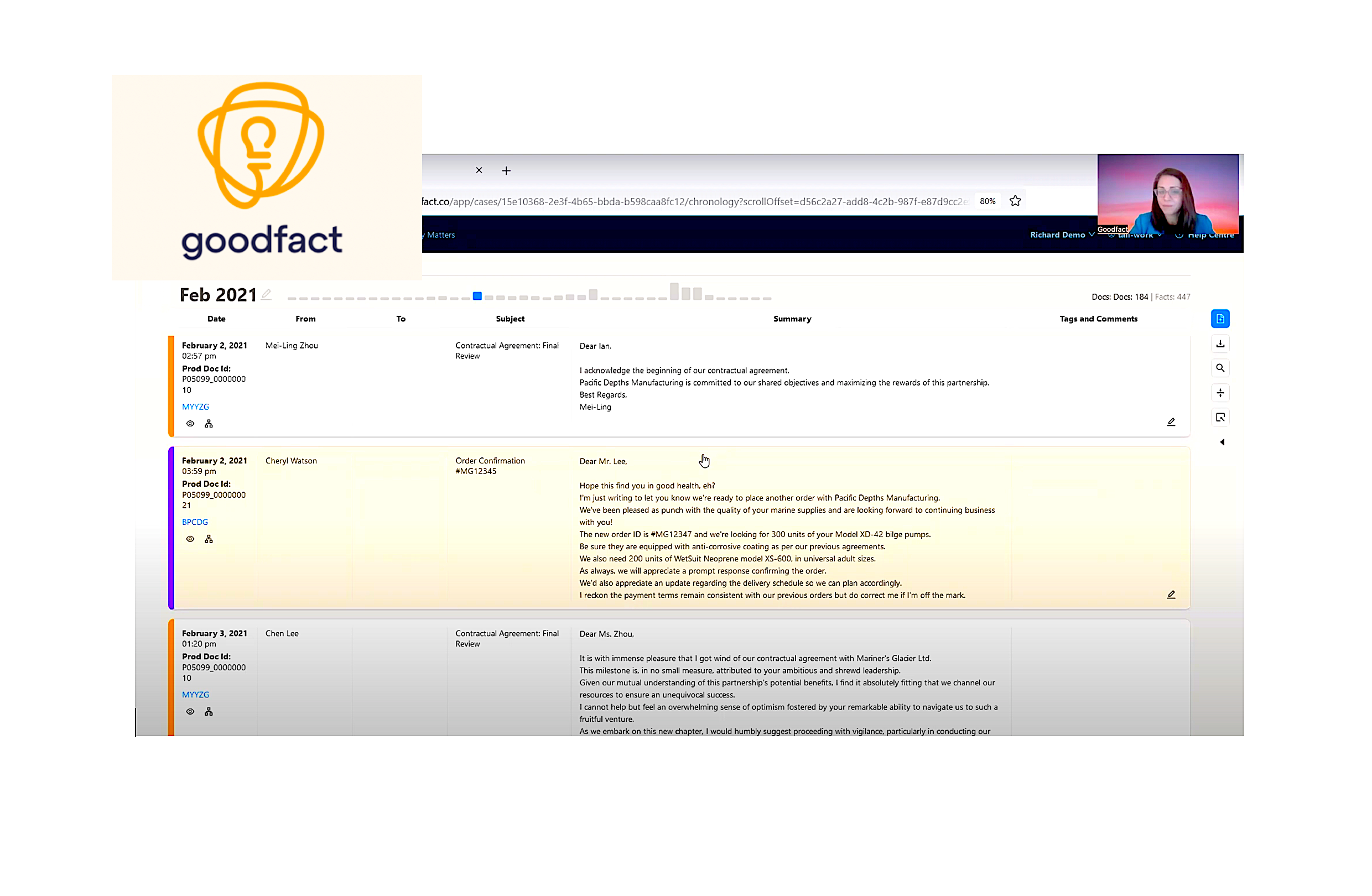
