Security researchers just found a major ChatGPT security flaw

A major security flaw in ChatGPT could allow bad actors to use the API to perform mass DDoS attacks. Researchers have reported the issue to OpenAI and have called on the company to fix the underlying infrastructure and close off this potential attack vector.

If you’ve never experienced a DDoS (Distributed Denial of Service) attack, you may not be sure what to make of this. At the most basic level, these cyberattacks involve a threat actor sending an enormous number of requests, usually from many machines at once, to a specific website or URL. When this happens, the web server typically becomes overwhelmed and can no longer respond to legitimate visitors, which effectively takes the site offline.

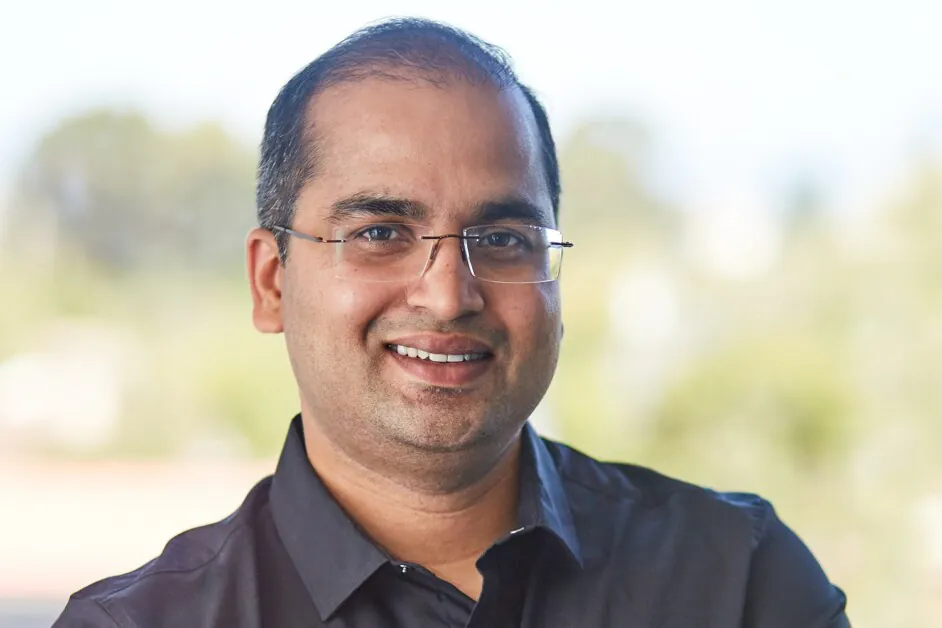

Researcher Benjamin Flesch outlined this new ChatGPT security flaw in a GitHub post. The flaw lies in how the ChatGPT API handles HTTP POST requests to a specific endpoint. Because there is no limit on how many links a user can provide through the “URLs” parameter, bad actors can add the same URL as many times as they want. If the API then requests every one of those links, a single POST request turns into a flood of traffic aimed at one target, effectively DDoSing a website or platform.
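To make the amplification concrete, here is a minimal sketch in Python. It does not touch the network or any real API: `naive_fanout` is a hypothetical stand-in for a handler that would fetch every submitted link once, with no cap and no duplicate check, and `victim.example` is a placeholder host.

```python
from collections import Counter


def naive_fanout(urls: list[str]) -> Counter:
    """Count the outbound requests per URL that a handler would make
    if it fetched every submitted link once (hypothetical behavior;
    nothing is actually requested here)."""
    return Counter(urls)


# One incoming POST body carrying the same link 5,000 times.
submitted = ["https://victim.example/"] * 5000
requests_per_target = naive_fanout(submitted)

# A single inbound request becomes 5,000 outbound requests to one site.
print(requests_per_target["https://victim.example/"])
```

The point of the sketch is the ratio: the attacker pays for one request, while the target receives as many as the list contains.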

This is, obviously, a serious issue, and one that needs to be fixed quickly before it is abused at scale. Thankfully, the solution should be fairly simple, Flesch says. All OpenAI really needs to do is enforce strict limits on the number of URLs a user can submit through the system. The company should probably also add a check that detects duplicate requests and limits them.
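As a rough illustration of those two mitigations, the following Python sketch caps the number of submitted URLs and collapses duplicates before anything would be fetched. It is not OpenAI's code: `MAX_URLS`, `normalize`, and `sanitize_urls` are hypothetical names, and the cap of 10 is an arbitrary assumption.

```python
from urllib.parse import urlsplit

MAX_URLS = 10  # hypothetical cap; the right value is a product decision


def normalize(url: str) -> str:
    """Reduce a URL to a comparable form: lowercase scheme and host,
    default path of "/", fragment dropped."""
    parts = urlsplit(url.strip())
    host = (parts.hostname or "").lower()
    port = f":{parts.port}" if parts.port else ""
    path = parts.path or "/"
    query = f"?{parts.query}" if parts.query else ""
    return f"{parts.scheme.lower()}://{host}{port}{path}{query}"


def sanitize_urls(urls: list[str], max_urls: int = MAX_URLS) -> list[str]:
    """Reject oversized submissions, then drop duplicate URLs."""
    # Check the raw count first so a huge list is refused cheaply.
    if len(urls) > max_urls:
        raise ValueError(f"too many URLs: {len(urls)} > {max_urls}")
    seen: set[str] = set()
    unique: list[str] = []
    for url in urls:
        key = normalize(url)
        if key not in seen:
            seen.add(key)
            unique.append(key)
    return unique


# Three spellings of the same page collapse into a single fetch.
print(sanitize_urls([
    "https://Example.com",
    "https://example.com/",
    "https://example.com/#top",
]))
```

Checking the raw count before deduplicating means a request stuffed with thousands of copies of one link is refused outright instead of being quietly reduced to a single fetch.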

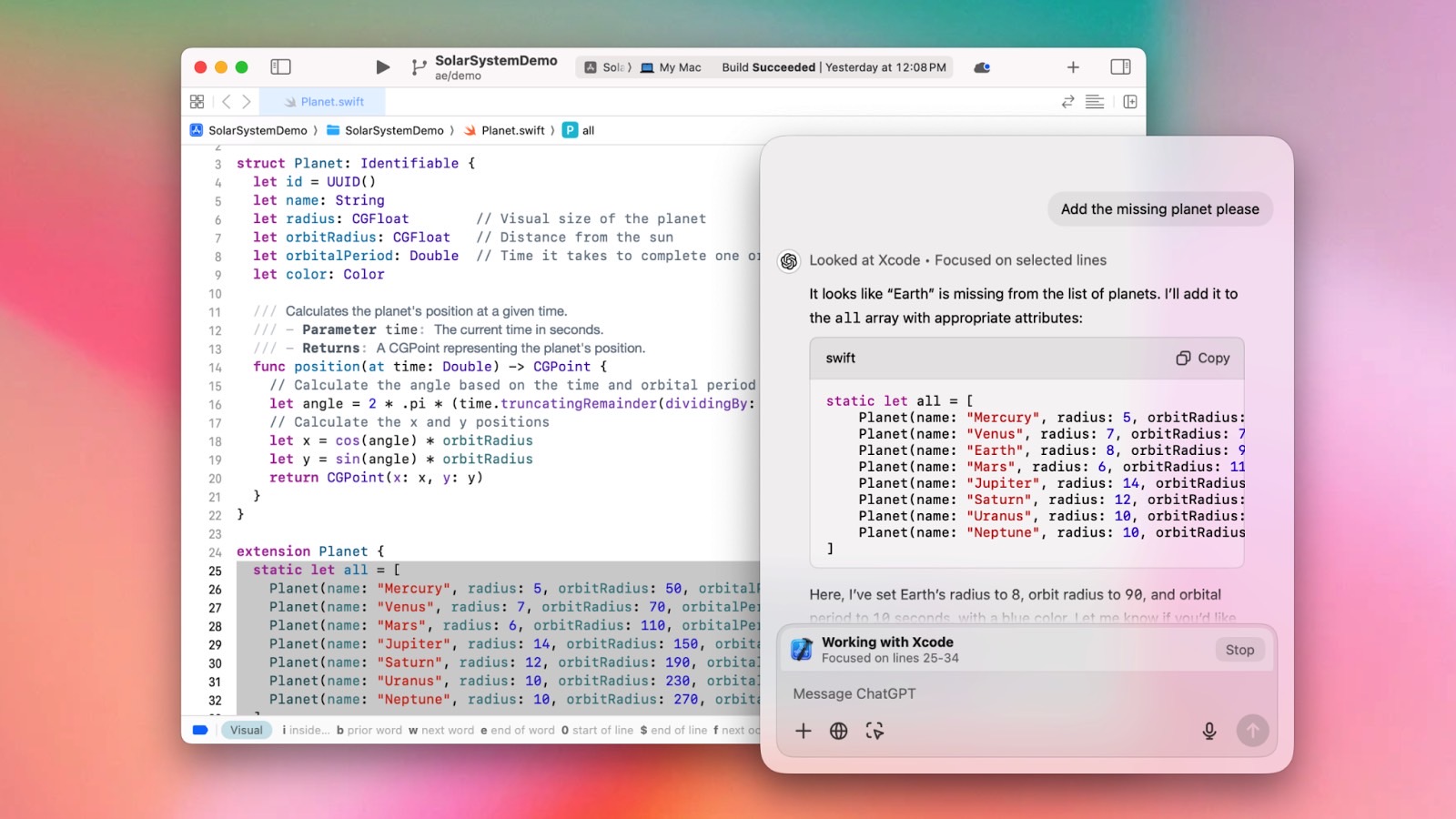

Unfortunately, this isn’t the first time people have found ways to abuse generative AI like ChatGPT, and it likely won’t be the last. However, it does help that researchers like Flesch are outlining easy ways for OpenAI and others to solve these problems before they get out of hand. Considering OpenAI’s work on ChatGPT Operators, it’s entirely possible the company is already working on a fix for this ChatGPT security flaw.

Of course, we won’t know for sure until the company announces it. It could also fix the issue quietly without drawing more attention to it, though that is less likely, as tech companies tend to be open about resolving big issues that security researchers have pointed out. In the meantime, we can only hope that nobody takes advantage of this flaw while it’s there.

Security researchers just found a major ChatGPT security flaw originally appeared on BGR.com on Wed, 22 Jan 2025 at 15:52:00 EDT. Please see our terms for use of feeds.
