DeepSeek and the race to surpass human intelligence

Back in October, I met with a young German start-up CEO who had integrated DeepSeek's open-source approach into his Mind-Verse platform and made it comply with German data privacy standards (DSGVO, known in English as the GDPR). Since then, many rumors have circulated that the Chinese team behind DeepSeek chose a different architecture for its foundation model, one that is not only open source but also far more efficient, requiring neither the same volume of training data nor the same compute resources.

With DeepSeek, this is not a singular “breakthrough moment.” Rather, AI development continues on an exponential trajectory: progress is accelerating, its impact is broadening, and with growing investment and more engineers involved, fundamental breakthroughs in engineering and architecture are only just beginning. Contrary to what some market spokespeople, investors, and even certain foundation model pioneers suggest, this is not solely about throwing infinite compute at the problem; we are still far from understanding core aspects of reasoning, consciousness, and the “operating model” (or software layers) of the human mind.

Additionally, DeepSeek is (or at least was) not a government-sponsored initiative; supposedly, even China's premier was surprised and visited Hangzhou to understand what was happening. Scale AI founder Alexandr Wang claims that DeepSeek already has a significant number of powerful H100 GPUs (about 50,000) but, because of U.S. export controls, cannot publicly acknowledge it. DeepSeek is reported to have only about 150 engineers, each earning roughly $70–100k, which is about one-eighth to one-tenth of top engineering salaries in Silicon Valley.

So, regardless of whether DeepSeek has powerful GPUs or whether $6 million or $150 million was invested, that is nowhere near the billions, or even tens of billions, poured into other major AI competitors. This example shows that different engineering and architectural approaches exist and may still be waiting to be uncovered. Most likely, this is not the ultimate approach, but it does challenge the current VC narrative that “it’s all about compute and scale.” Moreover, the open-source mindset behind DeepSeek challenges the typical approach to LLMs and highlights both its advantages and its potential risks.

Sam Altman is rumored to be hosting a “behind-closed-doors” meeting with the Trump administration on January 30th, where he plans to present so-called “PhD-level” AI agents, or super agentic AI. How “super” these agents will be remains unclear, and a public declaration of achieving AGI is unlikely. Still, with Mark Zuckerberg suggesting that Meta will soon publish substantial progress and Elon Musk hinting at new breakthroughs with Grok, DeepSeek is just another “breakthrough” that illustrates how fast the market is moving.

Once agentic AIs come online, they introduce a structural shift: agentic AI does not merely respond to a prompt; it pursues a goal. Through a network of super agents, massive amounts of data are gathered and analyzed, while real products and tasks are delivered autonomously. Notably, the fact that Sam Altman is briefing the U.S. government behind closed doors, rather than making a public appearance and release, hints at potential risks and consequences.

We are at the Verge of Hyper-Efficiency and Hyper-Innovation

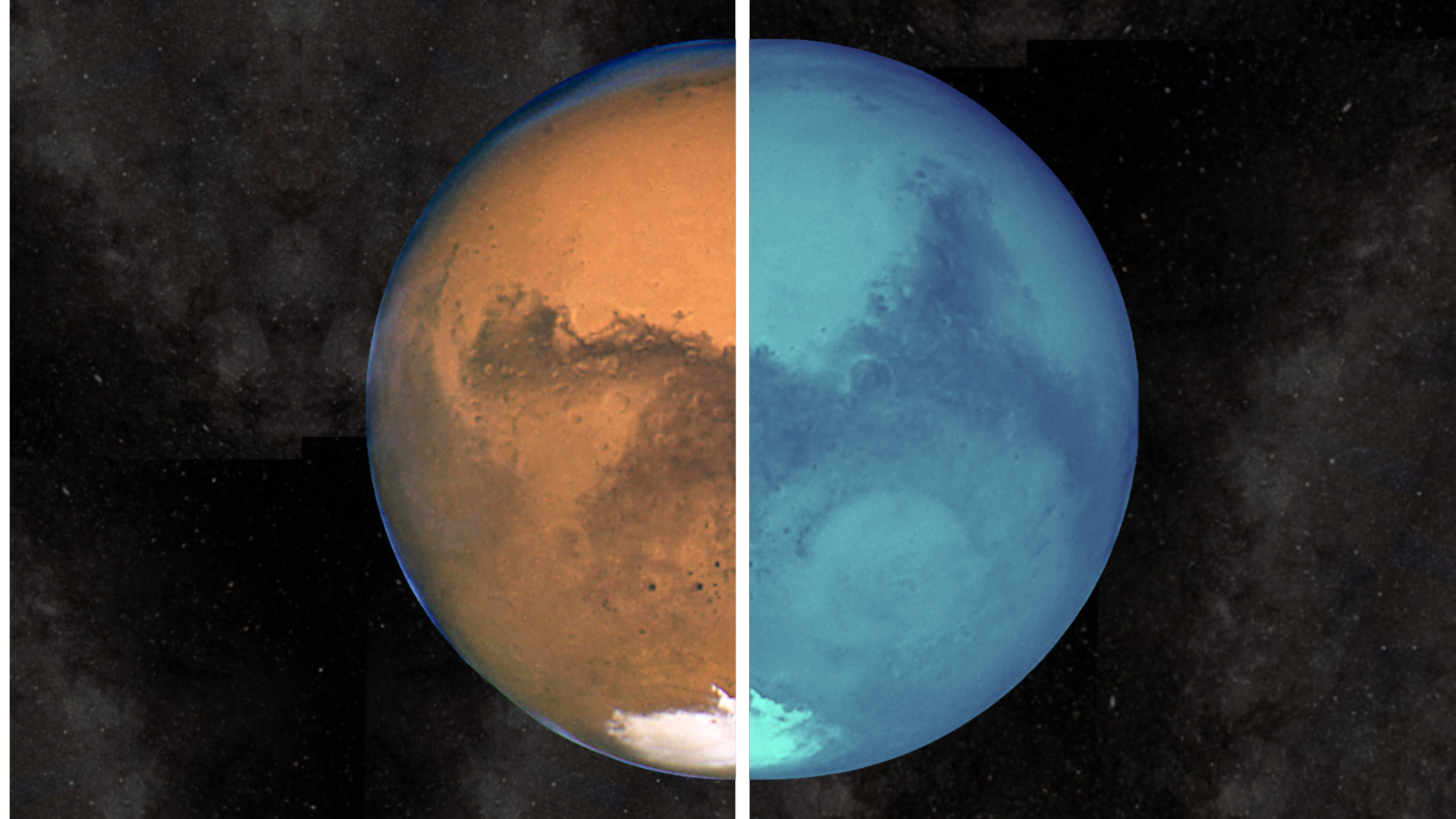

What we are seeing is the compound effect of investment and ever-growing teams working on these models, with few signs of a slowdown. Breakthroughs in quantum computing would be the next frontier, essentially “AI on steroids,” where the magnitude of change could increase exponentially. On the positive side, this could unleash innovations in health and medicine like never before in human history.

In the near future, broader access to AI tools will probably benefit infrastructure providers and hyperscalers such as AWS. It is unclear whether this will put NVIDIA at a disadvantage or actually benefit it: as “everyone” joins the AI race, demand for compute could grow well beyond big U.S. tech players like OpenAI. And while Anthropic and OpenAI run closed ecosystems, DeepSeek’s public paper shares many of its core methods.

The greatest risk to the U.S. and its current AI dominance is that China has both the talent and the strong work ethic to keep pushing forward. Trade sanctions won’t stop that. As more engineers come together and keep working, the odds of major breakthroughs increase.

The Battle of Distrust

Globally, the U.S. is losing trust, and the “don’t trust China” narrative is fading in many parts of the world. While Donald Trump may be gaining respect on the surface, global leaders are quietly looking for alternatives to reduce their dependence. Europe and other Asian nations don’t want to be “hostage” to U.S. technology and will open up to new options.

Technology doesn’t evolve overnight, and we’ve only seen the start of the breakthroughs to be announced by xAI (Grok), Meta, and OpenAI. Simultaneously, new capital will continue pouring in, and other regions will join the race now that it’s clear money alone isn’t everything. The future might not necessarily be bad for NVIDIA either, since data centers could appear everywhere, enabling a more global roll-out of AI and creating opportunities for many.

From Prompting to Action

There are still numerous smaller AI companies that have received massive funding purely on hope and hype. Yet new approaches to foundation models—via architectural and engineering innovation—can continue to drive progress. And once we “hack” biology or chemistry with AI, we may see entirely new levels of breakthroughs.

Looking toward the rest of 2025, we can expect more “super-agent” breakthroughs, as agentic AI and LQMs (Large Quantitative Models) push generative AI beyond playful language-based tools toward genuine replacements for human workers. Financial modeling and analysis will be optimized, and execution itself (the entire cycle of booking, planning, and organizing) could shift to autonomous agents. Over time, these integrated, adaptive agents will take over more and more use cases where humans currently remain in the loop. This may also be one of the biggest threats to society: market economies will have to cope with the extreme pressures of hyper-efficiency and hyper-innovation. This year, we are likely to see breakthroughs in education, science, health, consulting, and finance. With multiple compounding effects in play, we’ll likely experience hyper-efficiency and widespread growth.

However, the looming threats are real. Agentic AI at scale can still fall victim to hallucinations, and now anyone with a few million dollars can build their own model, potentially for malicious use. While a global, open approach to AI can be positive, many engineering and research challenges remain unsolved, leaving the risks high. With the U.S. laser-focused on AI, the race to surpass human-level intelligence is on.

This article was produced as part of TechRadarPro's Expert Insights channel where we feature the best and brightest minds in the technology industry today. The views expressed here are those of the author and are not necessarily those of TechRadarPro or Future plc. If you are interested in contributing find out more here: https://www.techradar.com/news/submit-your-story-to-techradar-pro