Beyond “Prompt and Pray”

TL;DR:

- Enterprise AI teams are discovering that purely agentic approaches (dynamically chaining LLM calls) don’t deliver the reliability needed for production systems.

- The prompt-and-pray model—where business logic lives entirely in prompts—creates systems that are unreliable, inefficient, and impossible to maintain at scale.

- A shift toward structured automation, which separates conversational ability from business logic execution, is needed for enterprise-grade reliability.

- This approach delivers substantial benefits: consistent execution, lower costs, better security, and systems that can be maintained like traditional software.

Picture this: The current state of conversational AI is like a scene from Hieronymus Bosch’s Garden of Earthly Delights. At first glance, it’s mesmerizing—a paradise of potential. AI systems promise seamless conversations, intelligent agents, and effortless integration. But look closely and chaos emerges: a false paradise all along.

Your company’s AI assistant confidently tells a customer it’s processed their urgent withdrawal request—except it hasn’t, because it misinterpreted the API documentation. Or perhaps it cheerfully informs your CEO it’s archived those sensitive board documents—into entirely the wrong folder. These aren’t hypothetical scenarios; they’re the daily reality for organizations betting their operations on the prompt-and-pray approach to AI implementation.

The Evolution of Expectations

For years, the AI world was driven by scaling laws: the empirical observation that larger models and bigger datasets led to proportionally better performance. This fueled a belief that simply making models bigger would solve deeper issues like accuracy, understanding, and reasoning. However, there’s growing consensus that the era of scaling laws is coming to an end. Incremental gains are harder to achieve, and organizations betting on ever-more-powerful LLMs are beginning to see diminishing returns.

Against this backdrop, expectations for conversational AI have skyrocketed. Remember the simple chatbots of yesterday? They handled basic FAQs with preprogrammed responses. Today’s enterprises want AI systems that can:

- Navigate complex workflows across multiple departments

- Interface with hundreds of internal APIs and services

- Handle sensitive operations with security and compliance in mind

- Scale reliably across thousands of users and millions of interactions

However, it’s important to carve out what these systems are—and are not. When we talk about conversational AI, we’re referring to systems designed to have a conversation, orchestrate workflows, and make decisions in real time. These are systems that engage in conversations and integrate with APIs but don’t create stand-alone content like emails, presentations, or documents. Use cases like “write this email for me” and “create a deck for me” fall into content generation, which lies outside this scope. This distinction is critical because the challenges and solutions for conversational AI are unique to systems that operate in an interactive, real-time environment.

We’ve been told 2025 will be the Year of Agents, but at the same time there’s a growing consensus from the likes of Anthropic, Hugging Face, and other leading voices that complex workflows require more control than simply trusting an LLM to figure everything out.

The Prompt-and-Pray Problem

The standard playbook for many conversational AI implementations today looks something like this:

- Collect relevant context and documentation

- Craft a prompt explaining the task

- Ask the LLM to generate a plan or response

- Trust that it works as intended

This approach—which we call prompt and pray—seems attractive at first. It’s quick to implement and demos well. But it harbors serious issues that become apparent at scale:

Unreliability

Every interaction becomes a new opportunity for error. The same query can yield different results depending on how the model interprets the context that day. When dealing with enterprise workflows, this variability is unacceptable.

To get a sense of how unreliable the prompt-and-pray approach is, consider that Hugging Face reports that state-of-the-art function calling is well under 90% accurate. Even 90% accuracy is often a deal-breaker for software, and the promise of agents rests on chaining calls together. At 90% per step, a chain of just five fails over 40% of the time (1 − 0.9⁵ ≈ 41%)!
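The compounding is easy to check. This sketch assumes each step succeeds independently with the same probability, which is a simplification (real failures may be correlated), and uses 90% as a generous upper bound on per-step accuracy.

```python
def chain_success_rate(per_step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain succeeds, assuming independent failures."""
    return per_step_accuracy ** steps


# Print success and failure rates for chains of increasing length at 90% per step.
for steps in (1, 3, 5, 10):
    success = chain_success_rate(0.9, steps)
    print(f"{steps:>2} steps: {success:.1%} succeed, {1 - success:.1%} fail")
```

The five-step line reports 59.0% succeed and 41.0% fail, which is where the "over 40%" figure comes from. With accuracy well under 90%, the numbers only get worse.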

Inefficiency

Dynamic generation of responses and plans is computationally expensive. Each interaction requires multiple API calls, token processing, and runtime decision-making. This translates to higher costs and slower response times.

Complexity

Debugging these systems is a nightmare. When an LLM doesn’t do what you want, your main recourse is to change the input. But the only way to know the impact that your change will have is trial and error. When your application comprises many steps, each of which uses the output from one LLM call as input for another, you are left sifting through chains of LLM reasoning, trying to understand why the model made certain decisions. Development velocity grinds to a halt.

Security

Letting LLMs make runtime decisions about business logic creates unnecessary risk. The OWASP AI Security & Privacy Guide specifically warns against “Excessive Agency”—giving AI systems too much autonomous decision-making power. Yet many current implementations do exactly that, exposing organizations to potential breaches and unintended outcomes.
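One way to limit agency is to let the model only *propose* actions and have deterministic code decide whether they run. The sketch below is illustrative. The action names, the `ProposedAction` type, and the refund ceiling are hypothetical placeholders, not part of any particular framework or of the OWASP guidance.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict


@dataclass
class ProposedAction:
    """An action the LLM suggests; nothing runs until authorize() approves it."""
    name: str
    params: Dict[str, Any] = field(default_factory=dict)


def _valid_order_lookup(params: Dict[str, Any]) -> bool:
    order_id = params.get("order_id")
    return isinstance(order_id, str) and bool(order_id)


def _valid_refund(params: Dict[str, Any]) -> bool:
    amount = params.get("amount")
    # Hypothetical policy: automated refunds capped at 100; larger ones need a human.
    is_number = isinstance(amount, (int, float)) and not isinstance(amount, bool)
    return is_number and 0 < amount <= 100


# Allowlist: the only actions the assistant can ever trigger, each with a parameter check.
ALLOWED_ACTIONS: Dict[str, Callable[[Dict[str, Any]], bool]] = {
    "lookup_order": _valid_order_lookup,
    "issue_refund": _valid_refund,
}


def authorize(action: ProposedAction) -> bool:
    """Reject anything outside the allowlist or failing its parameter check."""
    check = ALLOWED_ACTIONS.get(action.name)
    return check is not None and check(action.params)
```

For example, `authorize(ProposedAction("issue_refund", {"amount": 40}))` is `True`, while a 5,000 refund or an unlisted `"delete_account"` action is rejected. The policy lives in reviewed code rather than in a prompt the model might reinterpret.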

A Better Way Forward: Structured Automation

The alternative isn’t to abandon AI’s capabilities but to harness them more intelligently through structured automation. Structured automation is a development approach that separates conversational AI’s natural language understanding from deterministic workflow execution. This means using LLMs to interpret user input and clarify what users want, while relying on predefined, testable workflows for critical operations. By separating these concerns, structured automation ensures that AI-powered systems are reliable, efficient, and maintainable.

This approach separates concerns that are often muddled in prompt-and-pray systems:

- Understanding what the user wants: Use LLMs for their strength in understanding, manipulating, and producing natural language

- Business logic execution: Rely on predefined, tested workflows for critical operations

- State management: Maintain clear control over system state and transitions
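A minimal sketch of these three concerns, under stated assumptions: `interpret` is a hypothetical stand-in for an LLM call (a regex here so the example runs offline), and the states and transitions are illustrative names rather than part of any specific framework.

```python
import re
from enum import Enum, auto
from typing import Dict, Optional, Set, Tuple


class State(Enum):
    GATHERING = auto()
    RESOLVING = auto()
    DONE = auto()
    ESCALATED = auto()


# State management: every legal transition is listed explicitly and reviewable.
TRANSITIONS: Dict[State, Set[State]] = {
    State.GATHERING: {State.GATHERING, State.RESOLVING, State.ESCALATED},
    State.RESOLVING: {State.DONE, State.ESCALATED},
    State.DONE: set(),
    State.ESCALATED: set(),
}


def transition(current: State, target: State) -> State:
    if target not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition {current.name} -> {target.name}")
    return target


def interpret(message: str) -> Dict[str, Optional[str]]:
    """Understanding: stand-in for an LLM call that extracts structured fields.
    Hypothetical; a real system would ask a model for output matching this shape."""
    match = re.search(r"#(\d+)", message)
    return {"order_id": match.group(1) if match else None}


def step(state: State, message: str) -> Tuple[State, str]:
    """Business logic: deterministic code decides what happens next."""
    fields = interpret(message)
    if fields["order_id"] is None:
        return transition(state, State.GATHERING), "Which order number is this about?"
    return transition(state, State.RESOLVING), f"Looking up order {fields['order_id']}."
```

Starting from `State.GATHERING`, the message "Hey, you messed up my order!" keeps the conversation gathering and asks for an order number; a follow-up "It's order #1234" moves it to `RESOLVING`. The model only fills in fields, and it can never move the system into a state the transition table does not allow.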

The key principle is simple: Generate once, run reliably forever. Instead of having LLMs make runtime decisions about business logic, use them to help create robust, reusable workflows that can be tested, versioned, and maintained like traditional software.

By keeping the business logic separate from conversational capabilities, structured automation ensures that systems remain reliable, efficient, and secure. This approach also reinforces the boundary between generative conversational tasks (where the LLM thrives) and operational decision-making (which is best handled by deterministic, software-like processes).

By “predefined, tested workflows,” we mean creating workflows during the design phase, using AI to assist with ideas and patterns. These workflows are then implemented as traditional software, which can be tested, versioned, and maintained. This approach is well understood in software engineering and contrasts sharply with building agents that rely on runtime decisions—an inherently less reliable and harder-to-maintain model.

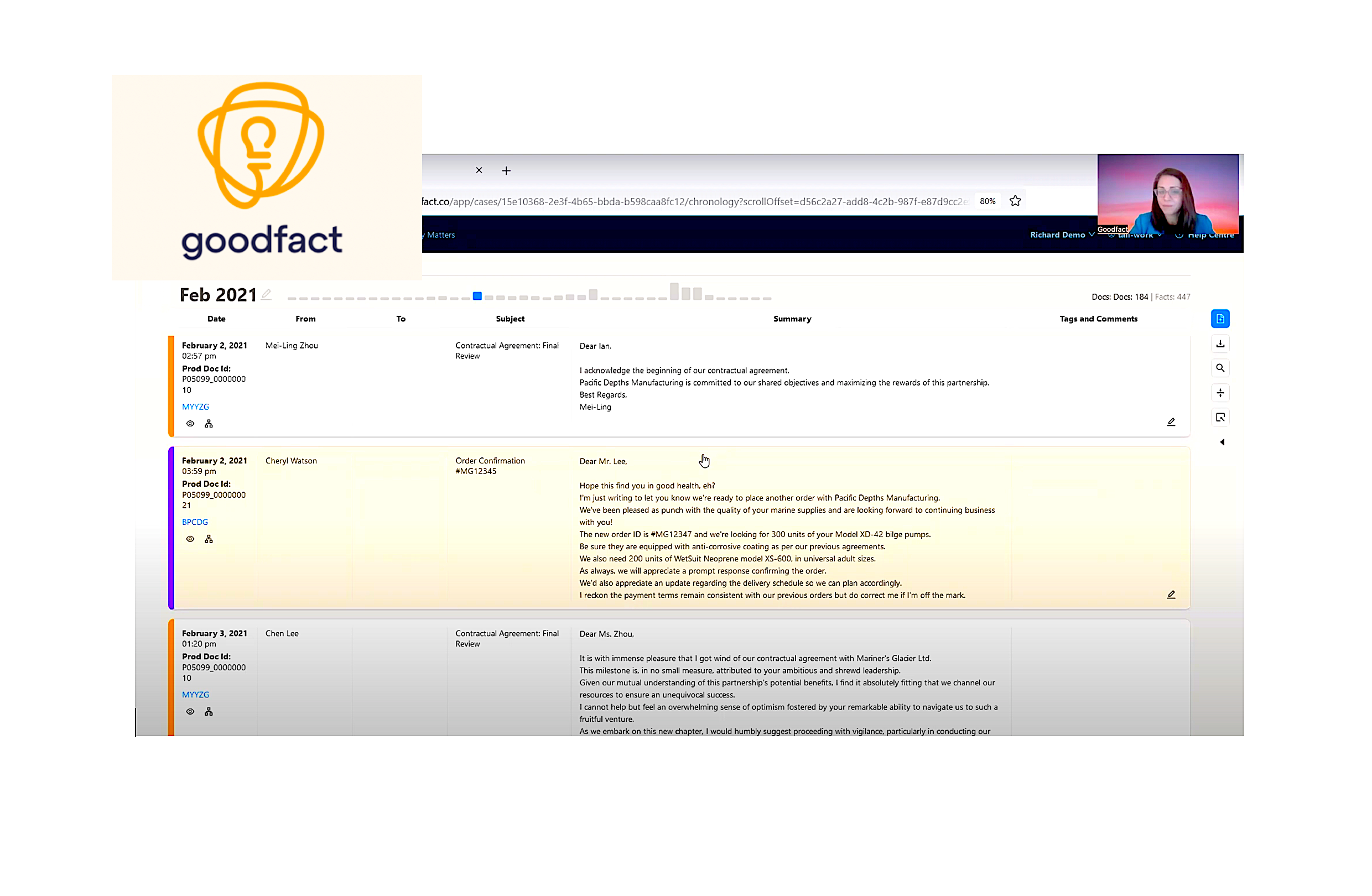

Alex Strick van Linschoten and the team at ZenML have recently compiled a database of 400+ (and growing!) LLM deployments in the enterprise. Not surprisingly, they discovered that structured automation delivers significantly more value across the board than the prompt-and-pray approach:

There’s a striking disconnect between the promise of fully autonomous agents and their presence in customer-facing deployments. This gap isn’t surprising when we examine the complexities involved. The reality is that successful deployments tend to favor a more constrained approach, and the reasons are illuminating…

Take Lindy.ai’s journey: they began with open-ended prompts, dreaming of fully autonomous agents. However, they discovered that reliability improved dramatically when they shifted to structured workflows. Similarly, Rexera found success by implementing decision trees for quality control, effectively constraining their agents’ decision space to improve predictability and reliability.

The prompt-and-pray approach is tempting because it demos well and feels fast. But beneath the surface, it’s a patchwork of brittle improvisation and runaway costs. The antidote isn’t abandoning the promise of AI—it’s designing systems with a clear separation of concerns: conversational fluency handled by LLMs, business logic powered by structured workflows.

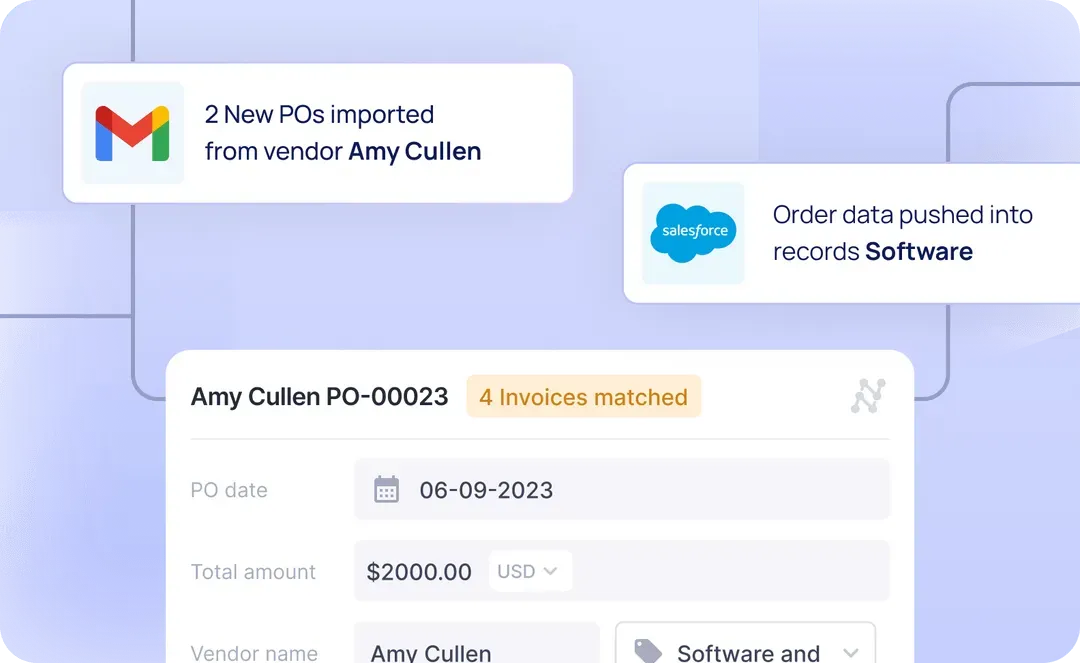

What Does Structured Automation Look Like in Practice?

Consider a typical customer support scenario: a customer messages your AI assistant saying, “Hey, you messed up my order!”

- The LLM interprets the user’s message, asking clarifying questions like, “What’s missing from your order?”

- Having received the relevant details, the structured workflow queries backend data to determine the issue: Were items shipped separately? Are they still in transit? Were they out of stock?

- Based on this information, the structured workflow determines the appropriate options: a refund, reshipment, or another resolution. If needed, it requests more information from the customer, leveraging the LLM to handle the conversation.

Here, the LLM excels at navigating the complexities of human language and dialogue. But the critical business logic—like querying databases, checking stock, and determining resolutions—lives in predefined workflows.

This approach ensures:

- Reliability: The same logic applies consistently across all users.

- Security: Sensitive operations are tightly controlled.

- Maintainability: Developers can test, version, and improve workflows like traditional software.
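The decision step in the order example above can be an ordinary function. This is a sketch assuming hypothetical field names and a simplified set of outcomes; a real workflow would pull these facts from order and inventory systems.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Resolution(Enum):
    ASK_CUSTOMER = "ask_customer"      # hand back to the LLM to ask what's missing
    SHARE_TRACKING = "share_tracking"  # e.g., shipped separately and still on its way
    REFUND = "refund"                  # item is out of stock
    RESHIP = "reship"                  # item is in stock and not in transit


@dataclass
class MissingItemReport:
    sku: Optional[str]  # item the customer says is missing, gathered in conversation
    in_transit: bool    # hypothetical fields a fulfillment backend might report
    in_stock: bool


def resolve_missing_item(report: MissingItemReport) -> Resolution:
    """Deterministic decision table: the same facts always yield the same outcome."""
    if report.sku is None:
        return Resolution.ASK_CUSTOMER
    if report.in_transit:
        return Resolution.SHARE_TRACKING
    if not report.in_stock:
        return Resolution.REFUND
    return Resolution.RESHIP
```

Because this is plain code, it can be unit-tested (for instance, asserting that an out-of-stock item not in transit yields `Resolution.REFUND`) and versioned like any other module, while the LLM handles only the surrounding conversation.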

Structured automation bridges the best of both worlds: conversational fluency powered by LLMs and dependable execution handled by workflows.

What About the Long Tail?

A common objection to structured automation is that it doesn’t scale to handle the “long tail” of tasks—those rare, unpredictable scenarios that seem impossible to predefine. But the truth is that structured automation simplifies edge-case management by making LLM improvisation safe and measurable.

Here’s how it works: Low-risk or rare tasks can be handled flexibly by LLMs in the short term. Each interaction is logged, patterns are analyzed, and workflows are created for tasks that become frequent or critical. Today’s LLMs are very capable of generating the code for a structured workflow given examples of successful conversations. This iterative approach turns the long tail into a manageable pipeline of new functionality, with the knowledge that by promoting these tasks into structured workflows we gain reliability, explainability, and efficiency.
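The logging-and-promotion loop can start very simply. In this hypothetical sketch, intent labels are assumed to come from an upstream classification step (possibly the LLM itself), and the promotion threshold is an arbitrary illustrative value.

```python
from collections import Counter
from typing import Dict, List


class LongTailTracker:
    """Hypothetical sketch: record conversations that fell through to free-form LLM
    handling and surface frequent intents as candidates for a structured workflow."""

    def __init__(self, promote_threshold: int = 3) -> None:
        self.promote_threshold = promote_threshold
        self.counts: Counter = Counter()
        # Successful transcripts can later serve as examples when asking an LLM
        # to draft the workflow code at design time.
        self.examples: Dict[str, List[str]] = {}

    def log_unhandled(self, intent: str, transcript: str, succeeded: bool) -> None:
        self.counts[intent] += 1
        if succeeded:
            self.examples.setdefault(intent, []).append(transcript)

    def promotion_candidates(self) -> List[str]:
        """Intents seen at least `promote_threshold` times, most frequent first."""
        return [i for i, n in self.counts.most_common() if n >= self.promote_threshold]
```

Counting every occurrence but keeping only successful transcripts separates two questions: how often a task comes up (whether it is worth promoting) and what a good resolution looks like (the examples used to generate and test the new workflow).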

From Runtime to Design Time

Let’s revisit the earlier example: a customer messages your AI assistant saying, “Hey, you messed up my order!”

The Prompt-and-Pray Approach

- Dynamically interprets messages and generates responses

- Makes real-time API calls to execute operations

- Relies on improvisation to resolve issues

This approach leads to unpredictable results, security risks, and high debugging costs.

A Structured Automation Approach

- Uses LLMs to interpret user input and gather details

- Executes critical tasks through tested, versioned workflows

- Relies on structured systems for consistent outcomes

This approach delivers:

- Predictable execution: Workflows behave consistently every time

- Lower costs: Reduced token usage and processing overhead

- Better security: Clear boundaries around sensitive operations

- Easier maintenance: Standard software development practices apply

The Role of Humans

For edge cases, the system escalates to a human with full context, ensuring sensitive scenarios are handled with care. This human-in-the-loop model combines AI efficiency with human oversight for a reliable and collaborative experience.
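“Full context” can be made concrete as a handoff record. The sketch below is hypothetical: the `Handoff` fields and the escalation rule (no workflow resolved the case, or the case is flagged sensitive) are illustrative assumptions, not a prescribed design.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class Handoff:
    """Hypothetical escalation ticket carrying the context a human agent needs."""
    reason: str
    transcript: List[str]
    collected_fields: Dict[str, Any]
    workflow_trace: List[str] = field(default_factory=list)


def maybe_escalate(
    resolved: bool,
    sensitive: bool,
    transcript: List[str],
    collected_fields: Dict[str, Any],
    workflow_trace: List[str],
) -> Optional[Handoff]:
    """Escalate when no workflow resolved the case or the case is flagged sensitive."""
    if resolved and not sensitive:
        return None
    reason = "sensitive case" if sensitive else "no workflow could resolve the request"
    # Copy inputs so later changes to the live conversation don't alter the ticket.
    return Handoff(reason, list(transcript), dict(collected_fields), list(workflow_trace))
```

Because the structured workflow already holds the collected fields and the steps it ran, the human agent starts from the same facts the system had, rather than from a summary the model improvised.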

This methodology extends beyond customer support to other domains like expense reporting, IT ticketing, and internal HR workflows: anywhere conversational AI needs to integrate reliably with backend systems.

Building for Scale

The future of enterprise conversational AI isn’t in giving models more runtime autonomy—it’s in using their capabilities more intelligently to create reliable, maintainable systems. This means:

- Treating AI-powered systems with the same engineering rigor as traditional software

- Using LLMs as tools for generation and understanding, not as runtime decision engines

- Building systems that can be understood, maintained, and improved by normal engineering teams

The question isn’t how to automate everything at once but how to do so in a way that scales, works reliably, and delivers consistent value.

Taking Action

For technical leaders and decision makers, the path forward is clear:

1. Audit current implementations:

- Identify areas where prompt-and-pray approaches create risk

- Measure the cost and reliability impact of current systems

- Look for opportunities to implement structured automation

2. Start small but think big:

- Begin with pilot projects in well-understood domains

- Build reusable components and patterns

- Document successes and lessons learned

3. Invest in the right tools and practices:

- Look for platforms that support structured automation

- Build expertise in both LLM capabilities and traditional software engineering

- Develop clear guidelines for when to use different approaches

The era of prompt and pray may just be beginning, but you can do better. As enterprises mature in their AI implementations, the focus must shift from impressive demos to reliable, scalable systems. Structured automation provides the framework for this transition, combining the power of AI with the reliability of traditional software engineering.

The future of enterprise AI isn’t just about having the latest models—it’s about using them wisely to build systems that work consistently, scale effectively, and deliver real value. The time to make this transition is now.
