Run PySpark Locally in a Python Notebook on Windows


Introduction

PySpark is the Python API for Apache Spark, an open-source distributed computing system that enables fast, scalable data processing. PySpark allows Python developers to leverage the powerful capabilities of Spark for big data analytics, machine learning, and data engineering tasks without needing to delve into the complexities of Java or Scala.

With PySpark, users can process large datasets across clusters, perform distributed data transformations, and run machine learning algorithms. It integrates with popular data processing frameworks like Hadoop and supports multiple data formats, making it a versatile tool in data science and analytics.

This post walks through the PySpark configuration so that you can set it up and use it on a local Windows machine.

Installation

- Install Python from https://www.python.org/downloads/

- Install Java

First of all, download the latest version of Java from https://jdk.java.net. I'm using Java 23 for this post. Note that the Spark 3.5 documentation lists Java 8, 11, and 17 as the supported versions, so if you run into JVM errors with a newer release, try one of those.

- Install PySpark

You also need to download Apache Spark. I'm using https://www.apache.org/dyn/closer.lua/spark/spark-3.5.4/spark-3.5.4-bin-hadoop3.tgz for this tutorial.

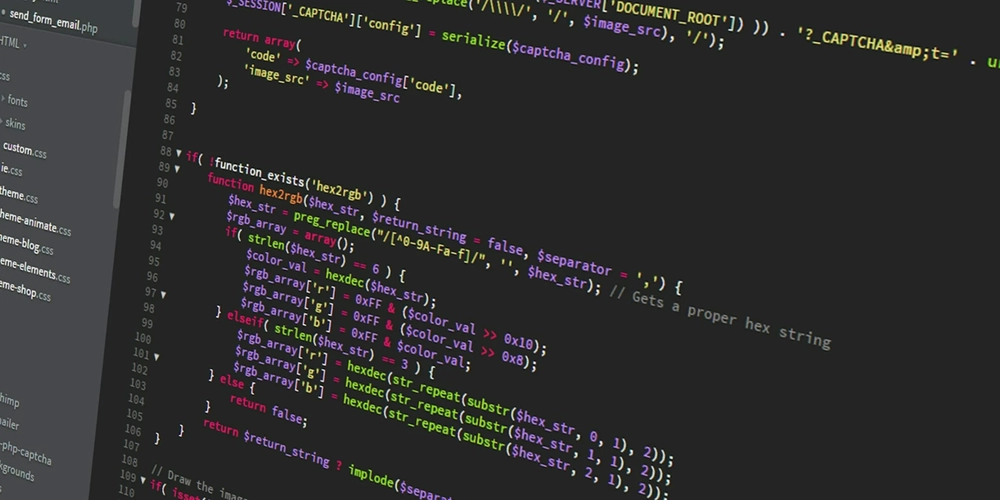
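To confirm which Java release is actually picked up after installation, one option is to parse the banner printed by `java -version`. The following is a minimal sketch, not part of the original setup: the helper names `parse_java_major` and `installed_java_major` are hypothetical, and the regular expression assumes the usual `version "X.Y.Z"` banner format.

```python
import re
import subprocess


def parse_java_major(banner: str) -> int:
    """Extract the major Java version from `java -version` output."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', banner)
    if match is None:
        raise ValueError(f"no version string found in: {banner!r}")
    major = int(match.group(1))
    # Java 8 and older report themselves as 1.x (for example "1.8.0_392").
    if major == 1 and match.group(2) is not None:
        return int(match.group(2))
    return major


def installed_java_major() -> int:
    """Run `java -version`; the banner is written to stderr, not stdout."""
    result = subprocess.run(
        ["java", "-version"], capture_output=True, text=True, check=True
    )
    return parse_java_major(result.stderr)
```

For example, `parse_java_major('openjdk version "23.0.1" 2024-10-15')` returns `23`, while `installed_java_major()` only works once `java` can be found on `PATH`.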

Python Configuration

- Java

import os

# Point JAVA_HOME at the JDK folder and put its bin directory first on PATH
os.environ["JAVA_HOME"] = r"D:\Soft\JAVA\jdk-23.0.1"
os.environ["PATH"] = os.path.join(os.environ["JAVA_HOME"], "bin") + os.pathsep + os.environ["PATH"]

- PySpark

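import os

# Point SPARK_HOME at the extracted Spark folder and put its bin directory first on PATH
os.environ["SPARK_HOME"] = r"D:\Soft\pyspark\spark-3.5.4-bin-hadoop3"
os.environ["PATH"] = os.path.join(os.environ["SPARK_HOME"], "bin") + os.pathsep + os.environ["PATH"]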
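Because notebook cells tend to be re-run, the two configuration steps above can keep prepending the same folders to `PATH`. A small helper can avoid that. This is only a sketch: `prepend_bin_to_path` is a hypothetical name, and it assumes the home folder contains a `bin` subfolder, as the JDK and Spark distributions do.

```python
import os


def prepend_bin_to_path(env, home_var, home_dir):
    """Set env[home_var] and put its bin directory first on env["PATH"]."""
    env[home_var] = home_dir
    bin_dir = os.path.join(home_dir, "bin")
    entries = [p for p in env.get("PATH", "").split(os.pathsep) if p]
    # Skip the insert if the directory is already listed, so re-running
    # a notebook cell does not keep growing PATH.
    if bin_dir not in entries:
        entries.insert(0, bin_dir)
    env["PATH"] = os.pathsep.join(entries)
    return env
```

It takes any mapping, so it can be called with the real environment, for example `prepend_bin_to_path(os.environ, "JAVA_HOME", r"D:\Soft\JAVA\jdk-23.0.1")`, or with a plain dictionary when experimenting.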

Once that is done, you can check that PySpark starts from the command line:

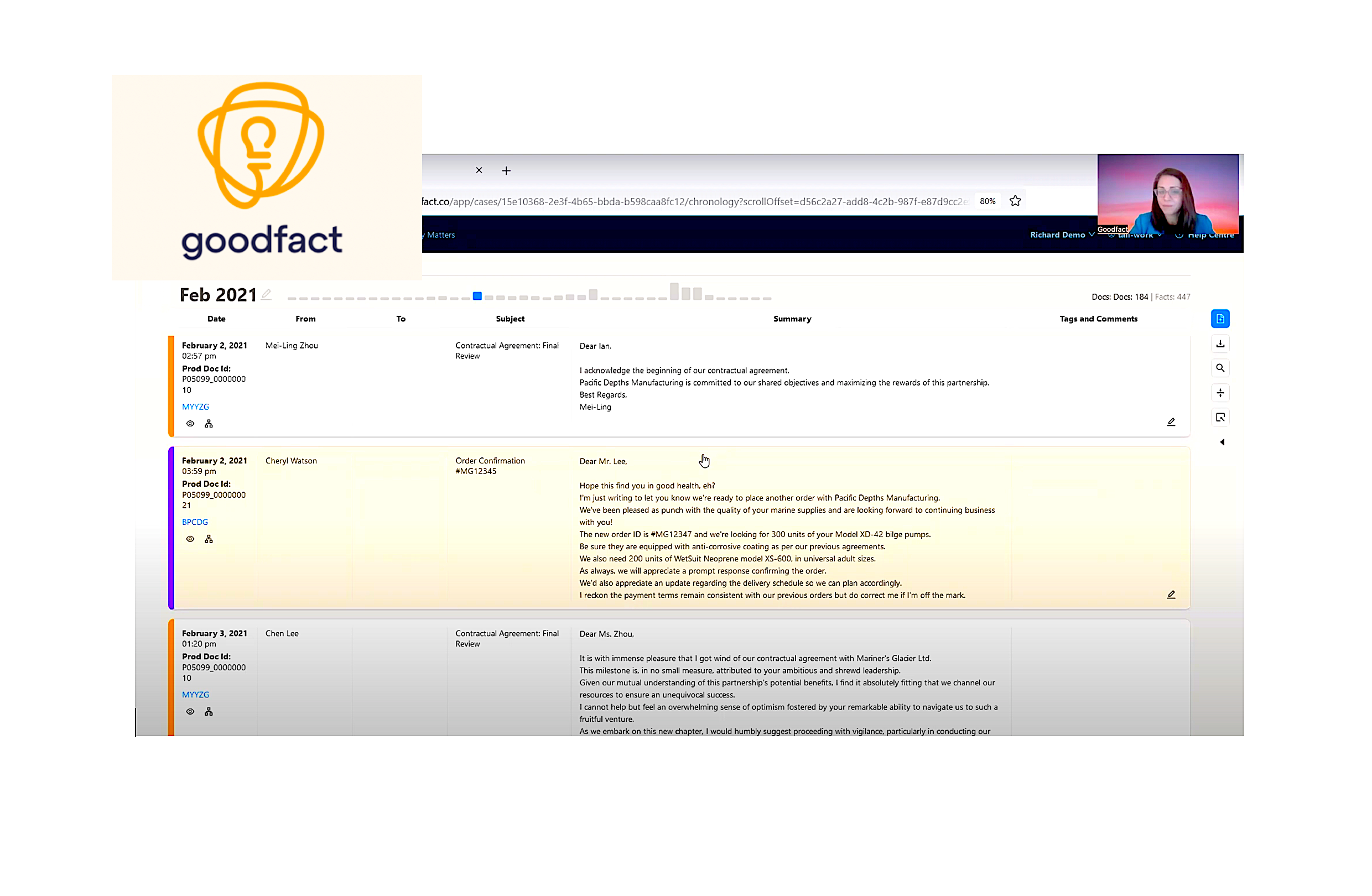
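The same check can be done from inside the notebook by asking Python where it would find the executables. This is a minimal sketch using only the standard library; `locate_tools` is a hypothetical helper name, and the tool names passed to it are assumptions about what a Spark installation puts in its `bin` folder.

```python
import shutil


def locate_tools(names):
    """Map each executable name to its resolved path, or None if not found."""
    return {name: shutil.which(name) for name in names}
```

Calling `locate_tools(["java", "spark-submit", "pyspark"])` after the configuration step should return a full path for each name. A `None` value suggests that the matching `bin` folder is not on `PATH` yet.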

Try an Example with PySpark in a Notebook

import numpy as np
import pandas as pd
from pyspark.sql import SparkSession

# Event logging needs the spark.eventLog.dir folder to exist (default: /tmp/spark-events)
spark = SparkSession.builder \
    .appName("Debugging Example") \
    .master("local[*]") \
    .config("spark.eventLog.enabled", "true") \
    .config("spark.sql.shuffle.partitions", "1") \
    .getOrCreate()

# DEBUG is very verbose; switch to "WARN" once everything works
spark.sparkContext.setLogLevel("DEBUG")

# Enable Arrow-based columnar data transfers (requires the pyarrow package)
spark.conf.set("spark.sql.execution.arrow.pyspark.enabled", "true")

# Generate a pandas DataFrame
pdf = pd.DataFrame(np.random.rand(100, 3))

# Create a Spark DataFrame from a pandas DataFrame using Arrow
df = spark.createDataFrame(pdf)

# Rename columns
df = df.toDF("a", "b", "c")

df

Use df.show(5) to print the first five rows and confirm that PySpark works.

Let's try an example with a machine learning dataset:

import requests

# URL for the dataset
url = "https://archive.ics.uci.edu/ml/machine-learning-databases/iris/iris.data"

# Download the dataset and save it locally
response = requests.get(url)
response.raise_for_status()  # Fail early if the download did not succeed
with open("iris.data", "wb") as file:
    file.write(response.content)

from pyspark.sql import SparkSession

# Create a SparkSession
spark = SparkSession.builder \
    .appName("Iris Data Analysis") \
    .master("local[*]") \
    .getOrCreate()

# Path to the locally downloaded Iris dataset
iris_data_path = "iris.data"

# Column names for the data (the file has no header row)
columns = ["sepal_length", "sepal_width", "petal_length", "petal_width", "species"]

# Load the data into a DataFrame
df = spark.read.csv(iris_data_path, header=False, inferSchema=True)

# Set column names
df = df.toDF(*columns)

# Show the first few rows of the DataFrame
df.show()

# Stop the SparkSession when done
spark.stop()
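If the load step in the example above produces unexpected columns, the downloaded file itself is worth a look, since a failed or partial download can leave rows with the wrong number of fields. The sketch below uses only the standard `csv` module; `check_rows` is a hypothetical helper, and the default of five fields is an assumption taken from the column list used above.

```python
import csv
import io


def check_rows(text, expected_fields=5):
    """Return (good_row_count, bad_row_numbers) for CSV text."""
    good, bad = 0, []
    for row_no, row in enumerate(csv.reader(io.StringIO(text)), start=1):
        if not row:  # csv.reader yields [] for a blank line
            continue
        if len(row) == expected_fields:
            good += 1
        else:
            bad.append(row_no)
    return good, bad
```

For the file saved earlier, it could be called as `check_rows(open("iris.data").read())`. An empty second element means every non-blank row has the expected number of fields.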

It works! Cheers!

