OpenAI’s New Research-Focused AI Agent and Altman’s Open Source Revelation

DeepSeek ripped up the AI playbook last week, prompting the entire industry to sit up and take notice. Some of the tech giants have been forced to rethink their game, as the old tactics might not cut it in this rapidly shifting landscape.

OpenAI, a key player in the AI arena, reacted to DeepSeek’s challenge by pledging to “deliver much better models” and accelerate product releases. Just days after that announcement, the San Francisco-based company released a new AI agent designed to conduct in-depth and complex research.

The aptly named Deep Research can conduct multi-step research on the internet for various topics including science, finance, engineering, and policy. It is built on a special version of the recently announced o3 reasoning AI model.

According to OpenAI, Deep Research is useful for a wide range of applications, from providing intensive knowledge for researchers to assisting shoppers looking for hyper-personalized recommendations. OpenAI claims in a blog post that Deep Research can accomplish “in tens of minutes what would take a human many hours.”

“Deep Research independently discovers, reasons about, and consolidates insights from across the web,” shared OpenAI. “To accomplish this, it was trained on real-world tasks requiring browser and Python tool use, using the same reinforcement learning methods behind OpenAI o1, our first reasoning model.”

“While o1 demonstrates impressive capabilities in coding, math, and other technical domains, many real-world challenges demand extensive context and information gathering from diverse online sources. Deep research builds on these reasoning capabilities to bridge that gap, allowing it to take on the types of problems people face in work and everyday life.”

Deep Research is available to OpenAI Pro users today, with a limit of 100 queries per month. Plus and Team users will be granted access next, with Enterprise users following afterward. OpenAI shared that all paid users will have significantly higher rate limits when the company releases a more cost-effective version of Deep Research in the near future.

Currently, the agent is available exclusively via the web, with plans to bring it to the mobile and desktop applications later this month.

In terms of performance benchmarks, OpenAI shared that Deep Research achieved a new high of 26.6% accuracy on Humanity’s Last Exam, a recently released AI evaluation tool based on expert-level questions.

So how does Deep Research compare with DeepSeek’s R1? It appears that the two are not direct competitors in function. Deep Research is more suited to structured research, citation management, and automated reasoning, while R1 is geared more toward solving complex mathematical and computational problems. Nonetheless, comparisons between the two are inevitable, especially considering the similarity in their names and the proximity of their launch dates.

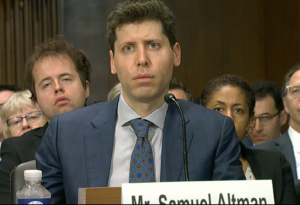

Sam Altman kicked off the year with a bold claim that OpenAI is now confident in its understanding of how to create AGI. The release of Deep Research aligns with the company's broader goal of developing AGI.

OpenAI’s Deep Research has the potential to drastically reduce the time and effort spent on online research. Using simple queries, users can have a personal research analyst at their fingertips. However, that is easier said than done.

AI hallucination remains a persistent challenge in GenAI models, and Deep Research is no exception. OpenAI admits that, based on its internal evaluations, Deep Research can sometimes hallucinate facts or give incorrect responses. This could be a major concern when conducting in-depth research.

OpenAI shared that Deep Research could “struggle with distinguishing authoritative information from rumors, and currently shows weakness in confidence calibration, often failing to convey uncertainty accurately.” However, it expects these issues to improve with more usage and time.

DeepSeek R1’s release reignited a debate surrounding the role of open-source code in the AI world. Despite its open-source roots and its name, OpenAI has shifted to a closed-source development approach.

In a Reddit AMA last week, Altman admitted that OpenAI has been “on the wrong side of history” and now needs to “figure out a different open-source strategy.” However, he was quick to mention that not everyone at OpenAI shares this perspective and that it is not currently the company's highest priority.

According to Kevin Weil, OpenAI’s chief product officer, the company is considering open-sourcing some of its older and less state-of-the-art models. It’s also considering revealing more under the hood. He acknowledged the challenge of balancing transparency with competitive risks but noted that OpenAI is actively exploring ways to enhance disclosure without compromising its competitive edge.

OpenAI may be considering a more open-source approach, but it may not happen anytime soon. The company has accused DeepSeek of unlawful use of its AI models. However, Altman says the company has “no plans to sue DeepSeek.” Interestingly, OpenAI itself is facing more than a dozen lawsuits over its alleged illegal use of copyrighted internet data to train its models. OpenAI appears concerned about the possibility of its models being replicated, and open-sourcing could indeed make replication easier. It remains to be seen whether OpenAI is willing to take this risk.