More Details On Why DeepSeek is a Big Deal

The DeepSeek large language models (LLM) have been making headlines lately, and for more than one reason. IEEE Spectrum has an article that sums everything up very nicely. We shared …read more

The DeepSeek large language models (LLM) have been making headlines lately, and for more than one reason. IEEE Spectrum has an article that sums everything up very nicely.

We shared the way DeepSeek made a splash when it came onto the AI scene not long ago, and this is a good opportunity to go into a few more details of why this has been such a big deal.

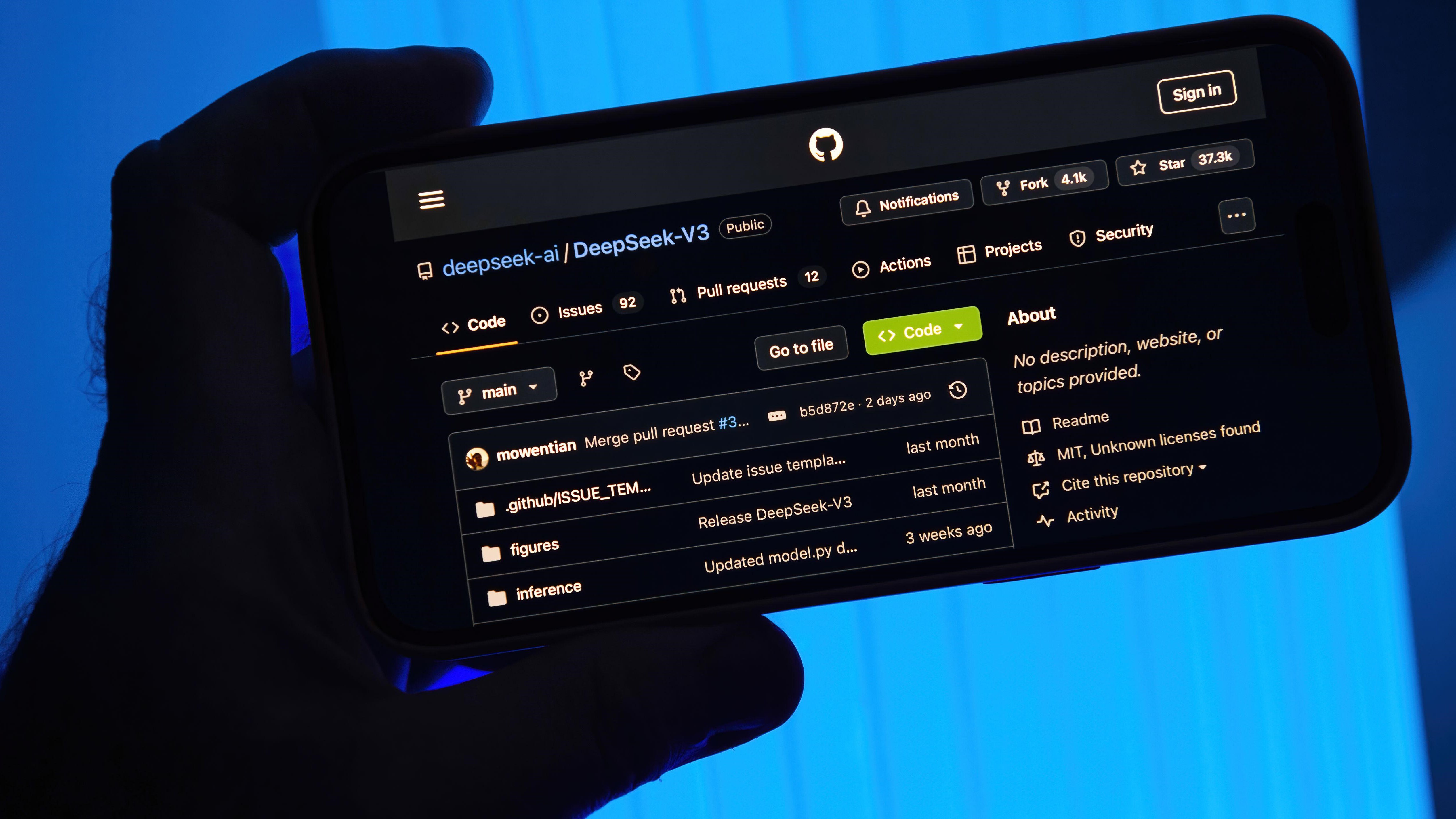

For one thing, DeepSeek (there’s actually two flavors, -V3 and -R1, more on them in a moment) punches well above its weight. DeepSeek is the product of an innovative development process, and freely available to use or modify. It is also indirectly highlighting the way companies in this space like to label their LLM offerings as “open” or “free”, but stop well short of actually making them open source.

The DeepSeek-V3 LLM was developed in China and reportedly cost less than 6 million USD to train. This was possible thanks to developing DualPipe, a highly optimized and scalable method of training the system despite limitations due to export restrictions on Nvidia hardware. Details are in the technical paper for DeepSeek-V3.

There’s also DeepSeek-R1, a chain-of-thought “reasoning” model which handily provides its thought process enclosed within easily-parsed pseudo-tags that are included in its responses. A model like this takes an iterative step-by-step approach to formulating responses, and benefits from prompts that provide a clear goal the LLM can aim for. The way DeepSeek-R1 was created was itself novel. Its training started with supervised fine-tuning (SFT) which is a human-led, intensive process as a “cold start” which eventually handed off to a more automated reinforcement learning (RL) process with a rules-based reward system. The result avoided problems that come from relying too much on RL, while minimizing the human effort of SFT. Technical details on the process of training DeepSeek-R1 are here.

DeepSeek-V3 and -R1 are freely available in the sense that one can access the full-powered models online or via an app, or download distilled models for local use on more limited hardware. It is free and open as in accessible, but not open source because not everything needed to replicate the work is actually released. Like with most LLMs, the training data and actual training code used are not available.

What is released and making waves of its own are the technical details of how researchers produced what they did, and that means there are efforts to try to make an actually open source version. Keep an eye out for Open-R1!