I built "ClickClickClick"


"What's my rating in Uber?"

Or

Draft an email to someone@gmail.com asking them to lunch next Saturday.

Or

Start a 3+2 game on Lichess.

All of these tasks require you to know the UI (for example, where to find your rating: "is it in the profile section, or is it even in the app at all?") and then to make several clicks.

With this framework, you can just type the task in plain text and watch the LLM do it for you.

How does it work?

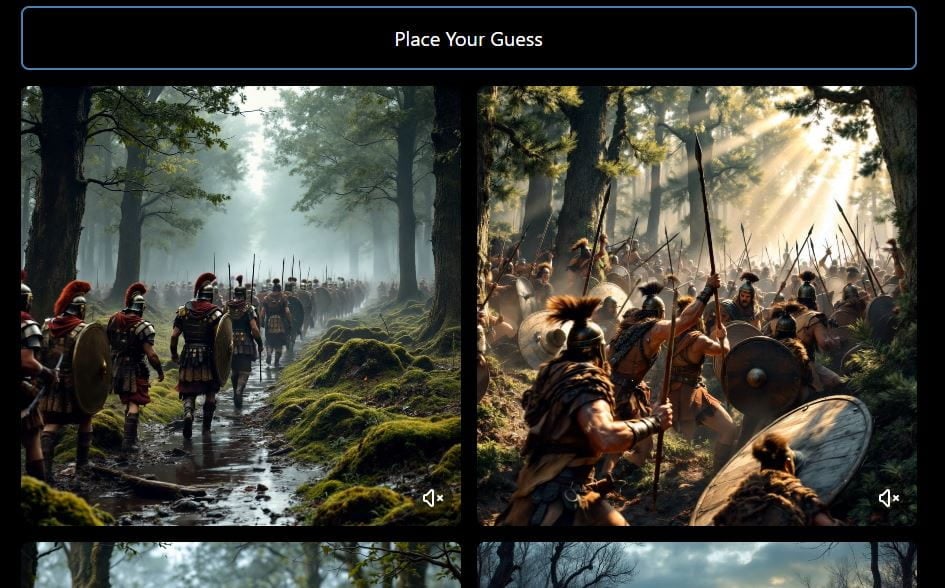

It has three separate components, as shown in the diagram above, each with its own responsibility:

- Planner: Plans the next step (given the current screenshot and the previous actions)
- Finder: Finds specific UI elements (whatever the Planner asks it to find)
- Executor: Clicks, scrolls, types, etc.
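To make the split concrete, here is a minimal Python sketch of how a Planner/Finder/Executor loop like this could be wired together. It is an illustration under assumptions, not the project's actual code: the `Step` structure, the three `Protocol` interfaces, `run_task` with its `max_steps` cap, and the scripted stand-ins used for a dry run are all hypothetical. In a real setup the Planner and Finder would presumably call a vision-capable LLM, and the Executor would drive the actual device or app.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Protocol


@dataclass
class Step:
    """One action proposed by the Planner (hypothetical structure)."""
    action: str                   # e.g. "click", "type", "scroll" or "done"
    target: Optional[str] = None  # plain-text description of a UI element
    text: Optional[str] = None    # text to type, if any


class Planner(Protocol):
    def next_step(self, goal: str, screenshot: bytes, history: list[Step]) -> Step: ...


class Finder(Protocol):
    def locate(self, screenshot: bytes, description: str) -> tuple[int, int]: ...


class Executor(Protocol):
    def screenshot(self) -> bytes: ...
    def perform(self, step: Step, coords: Optional[tuple[int, int]]) -> None: ...


def run_task(goal: str, planner: Planner, finder: Finder,
             executor: Executor, max_steps: int = 20) -> list[Step]:
    """Plan -> find -> execute until the Planner says 'done' or max_steps is hit."""
    history: list[Step] = []
    for _ in range(max_steps):
        shot = executor.screenshot()                   # current screen state
        step = planner.next_step(goal, shot, history)  # decide what to do next
        if step.action == "done":
            break
        # Ask the Finder for coordinates only when the step names a UI element.
        coords = finder.locate(shot, step.target) if step.target else None
        executor.perform(step, coords)                 # click / type / scroll
        history.append(step)
    return history


# --- Hypothetical stand-ins for the LLM-backed components (dry run only) ---
class ScriptedPlanner:
    """Replays a fixed list of steps, then reports 'done'."""
    def __init__(self, steps: Iterable[Step]) -> None:
        self._steps = iter(steps)

    def next_step(self, goal: str, screenshot: bytes, history: list[Step]) -> Step:
        return next(self._steps, Step("done"))


class FixedFinder:
    """Pretends every element is at the same screen position."""
    def locate(self, screenshot: bytes, description: str) -> tuple[int, int]:
        return (100, 200)


class LoggingExecutor:
    """Records actions instead of touching a real screen."""
    def __init__(self) -> None:
        self.log: list[tuple[str, Optional[tuple[int, int]]]] = []

    def screenshot(self) -> bytes:
        return b""

    def perform(self, step: Step, coords: Optional[tuple[int, int]]) -> None:
        self.log.append((step.action, coords))


if __name__ == "__main__":
    planner = ScriptedPlanner([Step("click", target="Profile tab"),
                               Step("scroll"),
                               Step("click", target="Rating")])
    executor = LoggingExecutor()
    run_task("What's my rating in Uber?", planner, FixedFinder(), executor)
    print(executor.log)
```

Running it as a script prints `[('click', (100, 200)), ('scroll', None), ('click', (100, 200))]`. The `max_steps` cap is there so a confused Planner can't loop forever, and because each component sits behind its own interface, swapping a stand-in for an OpenAI-, Gemini- or locally-backed class wouldn't change `run_task` itself.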

OpenAI / Gemini / Local LLM

I have added support for all of the above. To be precise, the following are the recommended models for each component.

You can use your own API keys and run it locally. Support for local LLMs is what I am most excited about, and I feel Molmo MLX for macOS is a great start.

Open-sourced

This project is open source for everyone to use and contribute to. You can also check out more demos in the README:

https://github.com/BandarLabs/clickclickclick

What do you guys think?

What use cases can you think of for this? For starters, I think developers could use it to "create walkthrough overlays over any app" or to "automate testing of any functionality of an app".