How Precision Time Protocol handles leap seconds

We’ve previously described why we think it’s time to leave the leap second in the past. In today’s rapidly evolving digital landscape, introducing new leap seconds to account for the long-term slowdown of the Earth’s rotation is a risky practice that, frankly, does more harm than good. This is particularly true in the data center space, where new protocols like Precision Time Protocol (PTP) are allowing systems to be synchronized down to nanosecond precision.

With the ever-growing demand for higher precision time distribution, and the larger role of PTP for time synchronization in data centers, we need to consider how to address leap seconds within systems that use PTP and are thus much more time sensitive.

Leap second smearing – a solution past its time

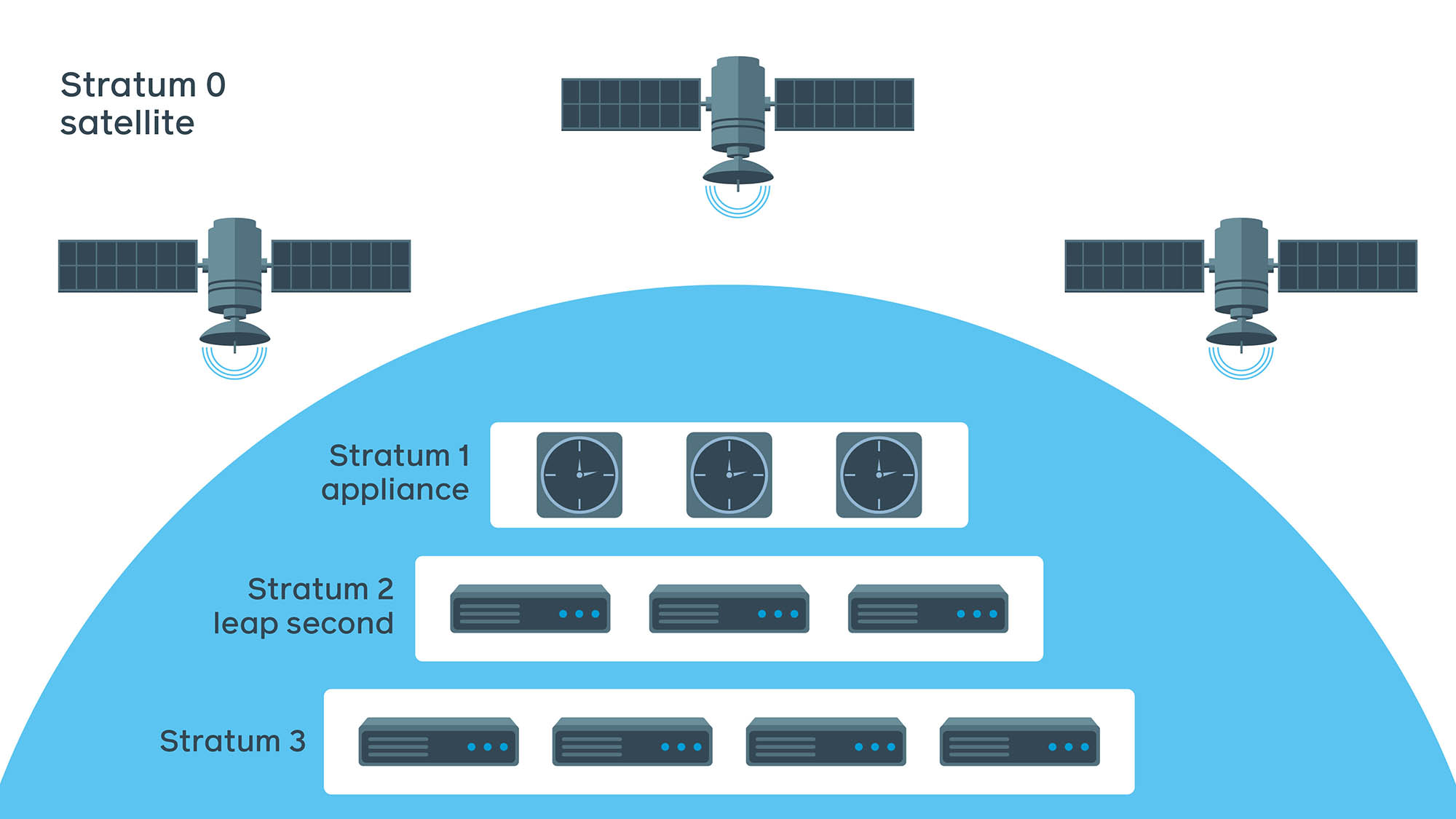

Leap second smearing, which adjusts the speed of clocks so that the one-second correction is absorbed gradually, has been a common method for handling leap seconds. At Meta, we’ve traditionally focused our smearing efforts on NTP, since it has been the de facto standard for time synchronization in data centers.

In large NTP deployments, leap second smearing is generally performed at Stratum 2, the layer of NTP servers that directly interact with NTP clients (Stratum 3), the downstream users of the NTP service.

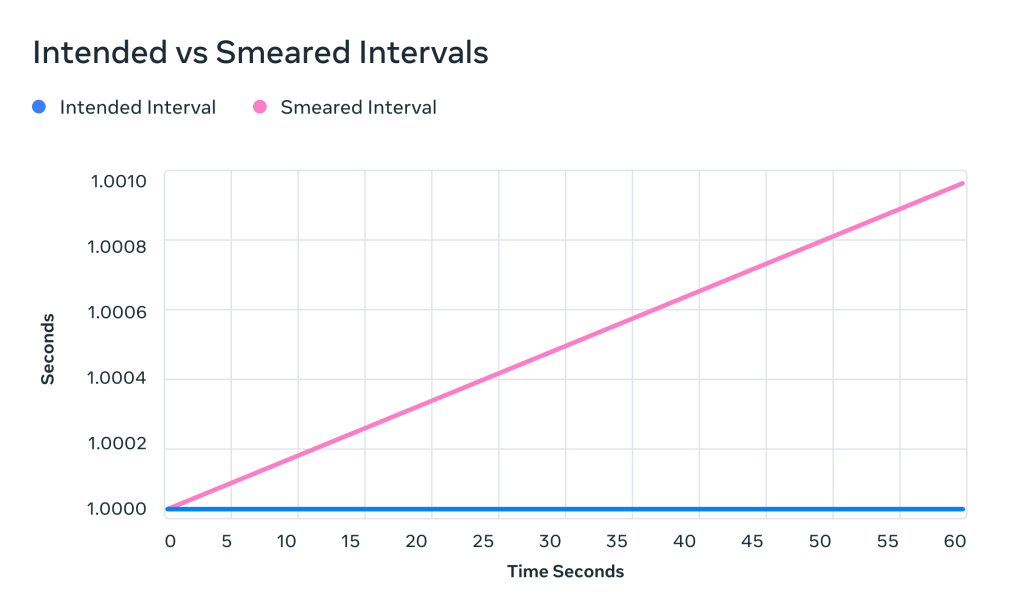

There are multiple approaches to smearing. In the case of NTP, linear or quadratic smearing formulas can be applied.

Quadratic smearing is often preferred because of the layered nature of NTP, where clients are encouraged to adjust their polling interval dynamically as the pending correction grows. This solution has its own tradeoffs, such as inconsistent adjustments, which can lead to different offset values across a large server fleet.

Linear smearing may be superior if an entire fleet relies on the same time sources and performs smearing at the same time. Combined with more frequent sync cycles (typically once per second), it is a more predictable, precise and reliable approach.
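To make the difference between the two formulas concrete, here is a minimal Python sketch of how much of a one-second correction each approach has applied at a given point in the smear window. The 24-hour window and the particular quadratic shape (a symmetric ease-in/ease-out curve whose rate ramps up and then down) are illustrative assumptions, not a description of Meta's NTP configuration.

```python
"""Sketch: portion of a 1 s leap correction applied partway through a smear.

Assumptions (illustrative only): the window length is a free parameter, and
the quadratic variant is a symmetric ease-in/ease-out curve, which is one
common way to build a quadratic smear.
"""


def linear_smear(elapsed_s: float, window_s: float) -> float:
    """Correction applied so far (seconds) with a constant clock-rate change."""
    if elapsed_s <= 0:
        return 0.0
    if elapsed_s >= window_s:
        return 1.0
    return elapsed_s / window_s


def quadratic_smear(elapsed_s: float, window_s: float) -> float:
    """Correction applied so far (seconds) with a linearly ramped rate change.

    The rate grows during the first half of the window and shrinks during the
    second half, so the offset follows two parabolic segments that meet at 0.5.
    """
    if elapsed_s <= 0:
        return 0.0
    if elapsed_s >= window_s:
        return 1.0
    x = elapsed_s / window_s
    if x < 0.5:
        return 2 * x * x
    return 1 - 2 * (1 - x) ** 2


if __name__ == "__main__":
    window = 86_400.0  # hypothetical 24-hour smear window
    for hours in (0, 6, 12, 18, 24):
        t = hours * 3600.0
        print(f"{hours:2d} h  linear={linear_smear(t, window):.4f} s  "
              f"quadratic={quadratic_smear(t, window):.4f} s")
```

Both curves deliver the full second by the end of the window; they differ in how quickly the correction accumulates along the way, which is why hosts following different curves disagree mid-smear.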

Handling leap seconds in PTP

In contrast to NTP, which synchronizes at the millisecond level, PTP typically provides precision in the range of nanoseconds. At this level of precision, even periodic linear smearing would create too much delta across the fleet and violate the guarantees provided to customers.
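A quick back-of-the-envelope calculation shows why. If two hosts apply the same linear smear but their view of the smear position differs by some skew (for example, because they last synchronized at different points in a once-per-second cycle), they disagree by the smear rate multiplied by that skew. The 24-hour window and 1 s skew below are hypothetical values chosen for illustration.

```python
"""Back-of-the-envelope: host-to-host disagreement caused by a linear smear.

Assumption (illustrative): two hosts apply the same linear 1 s smear, but
their view of the smear position differs by `skew_s` seconds. The resulting
disagreement is the smear rate multiplied by that skew.
"""

NS_PER_S = 1_000_000_000


def smear_rate_ns_per_s(window_s: float) -> float:
    """Nanoseconds of correction applied per second of a linear 1 s smear."""
    return NS_PER_S / window_s


def host_delta_ns(window_s: float, skew_s: float) -> float:
    """Disagreement between two hosts whose smear positions differ by skew_s."""
    return smear_rate_ns_per_s(window_s) * skew_s


if __name__ == "__main__":
    # Hypothetical 24-hour window, hosts up to one sync cycle (1 s) apart.
    delta = host_delta_ns(window_s=86_400, skew_s=1.0)
    print(f"{delta:,.0f} ns")  # about 11.6 microseconds
```

Roughly 11.6 microseconds is negligible for millisecond-level NTP but is several orders of magnitude above a nanosecond-level budget.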

To handle leap seconds in a PTP environment, we take an algorithmic approach that shifts time automatically for systems that use PTP, and we combine this with an emphasis on using International Atomic Time (TAI) over Coordinated Universal Time (UTC).

Self-smearing

At Meta, users interact with the PTP service via the fbclock library, which returns a tuple of values, {earliest_ns, latest_ns}, representing a time interval referred to as the Window of Uncertainty (WOU). Each time the library is called during the smearing period, we adjust the return values based on the smearing algorithm, which shifts the time values by 1 nanosecond every 62.5 microseconds.
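The stepping rule can be sketched in a few lines of Python. This is not fbclock's code or API: the `Wou` type, `smear_start_ns`, and the function names are hypothetical, and the sketch assumes a positive leap second (values are pulled back) and only applies the smear, not the base TAI to UTC offset. At 1 nanosecond per 62.5 microseconds, absorbing a full second takes 62,500 seconds, or about 17.4 hours.

```python
"""Sketch of a stateless self-smear applied to a Window of Uncertainty.

Assumptions (hypothetical, for illustration only): smear_start_ns is on the
same timescale as the WOU and is derived from tzdata; the smear absorbs one
positive leap second, so values are pulled back by 1 ns for every 62.5 us
elapsed. Names are illustrative and are not fbclock's real API.
"""
from typing import NamedTuple

STEP_INTERVAL_NS = 62_500                        # 62.5 microseconds per 1 ns step
LEAP_NS = 1_000_000_000                          # total correction: one second
SMEAR_DURATION_NS = LEAP_NS * STEP_INTERVAL_NS   # 62,500 s, about 17.4 hours


class Wou(NamedTuple):
    earliest_ns: int
    latest_ns: int


def smear_offset_ns(now_ns: int, smear_start_ns: int) -> int:
    """Correction applied at now_ns; depends only on its inputs, no stored state."""
    elapsed = now_ns - smear_start_ns
    if elapsed <= 0:
        return 0
    return min(elapsed // STEP_INTERVAL_NS, LEAP_NS)


def smeared_wou(wou: Wou, smear_start_ns: int) -> Wou:
    """Shift both bounds by the offset at earliest_ns, keeping the WOU width."""
    offset = smear_offset_ns(wou.earliest_ns, smear_start_ns)
    return Wou(wou.earliest_ns - offset, wou.latest_ns - offset)


if __name__ == "__main__":
    hour_ns = 3600 * 1_000_000_000
    print(smear_offset_ns(hour_ns, smear_start_ns=0))  # 57600000 (57.6 ms)
    print(smeared_wou(Wou(hour_ns, hour_ns + 100), smear_start_ns=0))
```

Because the offset is a pure function of the current time and the smear start, a host that reboots mid-smear recomputes the same position without any saved state.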

This approach has a number of advantages, including being completely stateless and reproducible. The service continues to use TAI timestamps but can return UTC timestamps to clients via the API. And because the start time is determined by tzdata timestamps, the current smearing position can be determined even after a server is rebooted.

This approach does come with some tradeoffs. For example, because the leap smearing strategy differs between the NTP (quadratic) and PTP (linear) ecosystems, services may struggle to match timestamps acquired from different sources during the smearing period.

The difference between the two approaches can exceed 100 microseconds, creating challenges for services that consume time from both systems.

TAI over UTC

The smearing strategy we implemented in our fbclock library performs well. However, despite being fully stateless and using small (1 nanosecond), fixed step sizes, it still introduces significant time deltas between hosts during the smearing period.

Another significant drawback affects periodically running jobs. For services that run at precise intervals, smearing means scheduling is off by close to 1 millisecond after 60 seconds.

This is not ideal for a service that guarantees nanosecond-level accuracy and precision.
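The "close to 1 millisecond after 60 seconds" figure follows directly from the step rule of 1 nanosecond per 62.5 microseconds. The short Python check below uses exact fractions so the result is not affected by floating-point rounding; the function name is illustrative.

```python
"""Worked arithmetic: drift of a fixed-interval job during the smear.

With a 1 ns step every 62.5 us, smeared time diverges from the underlying
TAI clock at a constant ratio of 1/62,500, so a job scheduled every
interval_s seconds on smeared time drifts by interval_s * 1e9 / 62,500 ns.
"""
from fractions import Fraction

STEP_INTERVAL_NS = 62_500
SMEAR_RATE = Fraction(1, STEP_INTERVAL_NS)  # 1 ns per 62,500 ns elapsed


def drift_ns(interval_s: int) -> Fraction:
    """Drift in nanoseconds accumulated over interval_s seconds of smearing."""
    return interval_s * 1_000_000_000 * SMEAR_RATE


if __name__ == "__main__":
    print(drift_ns(60))  # 960000 ns, i.e. 0.96 ms
```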

As a result, we recommend that customers use TAI over UTC and thus avoid having to deal with leap seconds. Unfortunately, in most cases the conversion to UTC is still required and eventually has to be performed somewhere.
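Where that conversion happens, it amounts to looking up the TAI minus UTC offset in effect at a given instant. The Python sketch below assumes TAI is represented the way Linux `CLOCK_TAI` is, as the Unix UTC second count plus the current TAI minus UTC offset, and it includes only the two most recent table entries (36 s from 1 July 2015, 37 s from 1 January 2017). The table layout and function name are hypothetical; a real system would load the full list from tzdata.

```python
"""Sketch: converting a TAI timestamp to a Unix-style UTC count.

Assumptions (illustrative): TAI seconds are represented as the Unix UTC count
plus (TAI - UTC), as with Linux CLOCK_TAI. Each table entry is
(first TAI second at which the offset applies, TAI - UTC in seconds).
Only the two most recent entries are included here.
"""
import bisect

LEAP_TABLE = [
    (1_435_708_800 + 36, 36),  # 2015-07-01T00:00:00 UTC
    (1_483_228_800 + 37, 37),  # 2017-01-01T00:00:00 UTC
]
_STARTS = [start for start, _ in LEAP_TABLE]


def tai_to_utc(tai_s: int) -> int:
    """Return the Unix UTC second count for a TAI timestamp."""
    idx = bisect.bisect_right(_STARTS, tai_s) - 1
    if idx < 0:
        raise ValueError("timestamp predates the loaded leap-second table")
    return tai_s - LEAP_TABLE[idx][1]


if __name__ == "__main__":
    print(tai_to_utc(1_483_228_835))  # 1483228799 (2016-12-31T23:59:59 UTC)
    print(tai_to_utc(1_483_228_836))  # 1483228800 (the inserted 23:59:60)
    print(tai_to_utc(1_483_228_837))  # 1483228800 (2017-01-01T00:00:00 UTC)
```

Note that the inserted second and the one after it map to the same Unix count: that repeated second is exactly the discontinuity that smearing is meant to hide.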

PTP without leap seconds

At Meta, we support the recent push to freeze any new leap seconds after 2035. If we can cease the introduction of new leap seconds, then the entire industry can rely on UTC instead of TAI for higher precision timekeeping. This will simplify infrastructure and remove the need for different smearing solutions.

Ultimately, a future without leap seconds is one where we can push systems to greater levels of timekeeping precision more easily and efficiently.

The post How Precision Time Protocol handles leap seconds appeared first on Engineering at Meta.
