Extracting Structured Vehicle Data from Images

Build an Automated Vehicle Documentation System that Extracts Structured Information from Images, using the OpenAI API, LangChain, and Pydantic.

Introduction

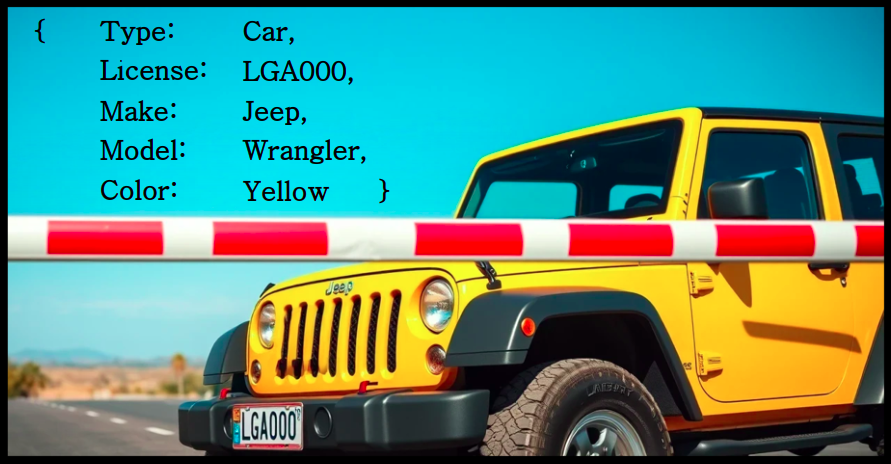

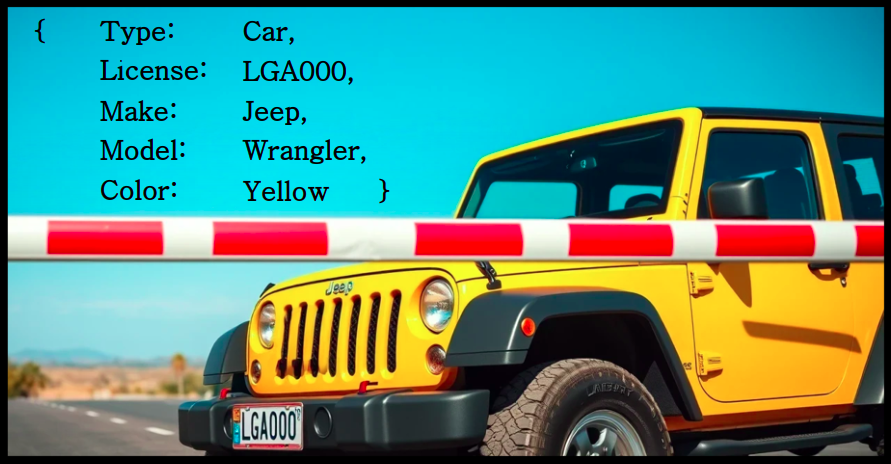

Imagine a camera monitoring cars at an inspection point, and your mission is to document detailed vehicle information: type, license plate number, make, model, and color. The task is challenging: classic computer vision methods struggle with varied visual patterns, while supervised deep learning requires integrating multiple specialized models, extensive labeled data, and tedious training. Recent advances in pre-trained multimodal LLMs (MLLMs) offer fast and flexible solutions, but getting them to produce structured outputs requires some adaptation.

In this tutorial, we’ll build a vehicle documentation system that extracts essential details from vehicle images. These details will be extracted in a structured format, making them accessible for downstream use. We’ll use OpenAI’s GPT-4 to extract the data, Pydantic to structure the outputs, and LangChain to orchestrate the pipeline. By the end, you’ll have a practical pipeline for transforming raw images into structured, actionable data.

This tutorial is aimed at computer vision practitioners, data scientists, and developers who are interested in using LLMs for visual tasks. The full code is provided in an easy-to-use Colab notebook to help you follow along step-by-step.

Technology Stack

- GPT-4 Vision Model: GPT-4 is a multimodal model developed by OpenAI, capable of understanding both text and images [1]. Trained on vast amounts of multimodal data, it can generalize across a wide variety of tasks in a zero-shot manner, often without the need for fine-tuning. While the exact architecture and size of GPT-4 have not been publicly disclosed, its capabilities are among the most advanced in the field. GPT-4 is available via the OpenAI API on a paid, per-token basis. In this tutorial, we use GPT-4 for its excellent zero-shot performance, but the code allows for easy swapping with other models based on your needs.

- LangChain: For building the pipeline, we will use LangChain. LangChain is a framework that simplifies complex workflows, keeps the code consistent, and makes it easy to switch between LLMs [2]. In our case, LangChain will help us link the steps of loading images, generating prompts, invoking the GPT model, and parsing the output into structured data.

- Pydantic: Pydantic is a powerful library for data validation in Python [3]. We’ll use Pydantic to define the structure of the expected output from the GPT-4 model. This will help us ensure that the output is consistent and easy to work with.
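To make the role of Pydantic more concrete, here is a minimal sketch of what an output schema for the five details listed in the introduction might look like. The class name `Vehicle`, the field names, and the helper `parse_vehicle` are hypothetical choices for illustration, not necessarily the ones used in the accompanying notebook.

```python
from typing import Optional

from pydantic import BaseModel, Field, ValidationError


class Vehicle(BaseModel):
    """Hypothetical schema for the details we want from each image."""

    vehicle_type: str = Field(description="Vehicle type, e.g. car, truck, bus")
    # The plate may be unreadable or out of frame, so it is optional here.
    license_plate: Optional[str] = Field(
        default=None, description="License plate text, or None if unreadable"
    )
    make: str = Field(description="Manufacturer, e.g. Toyota")
    model: str = Field(description="Model name, e.g. Corolla")
    color: str = Field(description="Dominant body color")


def parse_vehicle(raw: dict) -> Optional[Vehicle]:
    """Validate an already-decoded response; return None if it does not fit."""
    try:
        return Vehicle(**raw)
    except ValidationError:
        return None
```

A response that is missing required fields is rejected instead of silently passing downstream, which is the consistency benefit described above. The field descriptions can also double as hints to the model when the schema is included in the prompt.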

Dataset Overview

To simulate data from a vehicle inspection checkpoint, we’ll use a sample of vehicle images from the ‘Car Number plate’ Kaggle dataset [4]. This dataset is available under the Apache 2.0 License. You can view the images below:

Vehicle images from the ‘Car Number plate’ Kaggle dataset
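Before an image file can be sent to a multimodal chat model, it is typically encoded as a base64 data URL and wrapped in a message alongside the text instruction. The sketch below shows one way to do this with the standard library only. The function names are hypothetical, and the message layout assumes the `image_url` content format accepted by the OpenAI chat API.

```python
import base64
import mimetypes
from pathlib import Path


def image_to_data_url(path: str) -> str:
    """Read an image file and return it as a base64 data URL."""
    mime, _ = mimetypes.guess_type(path)
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"Not a recognized image file: {path}")
    encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def build_image_message(path: str, instruction: str) -> dict:
    """Build a user message holding one text part and one image part."""
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": instruction},
            {"type": "image_url", "image_url": {"url": image_to_data_url(path)}},
        ],
    }
```

The MIME type is guessed from the file extension, so a misnamed file would be labeled incorrectly. For a curated dataset like this one, that is usually an acceptable shortcut.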

Let’s Code!

Before diving into the practical implementation, we need to take care of some preparations:

- Generate an OpenAI API key: The OpenAI API is a paid service. To use it, you need to sign up for an OpenAI account and generate a secret API key linked to a paid plan.

- Configure your OpenAI API key: In Colab, you can securely store your API key as an environment variable (secret), found on the left sidebar (
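As a rough sketch of the configuration step, the helper below tries Colab’s secrets store first and falls back to a regular environment variable when run elsewhere. The secret name `OPENAI_API_KEY` and the function name `load_openai_api_key` are assumptions made for this example. Use whatever name you gave the secret in your own notebook.

```python
import os


def load_openai_api_key(name: str = "OPENAI_API_KEY") -> str:
    """Return the API key from Colab secrets, falling back to the environment."""
    try:
        # google.colab is only importable inside a Colab runtime.
        from google.colab import userdata

        key = userdata.get(name)
    except Exception:
        # Not running in Colab, or the secret is missing or not shared
        # with this notebook.
        key = None
    key = key or os.environ.get(name)
    if not key:
        raise RuntimeError(f"{name} is not set; add it as a Colab secret or env var.")
    return key
```

A typical use is `os.environ["OPENAI_API_KEY"] = load_openai_api_key()` near the top of the notebook, so that client libraries that read the key from the environment can find it.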
