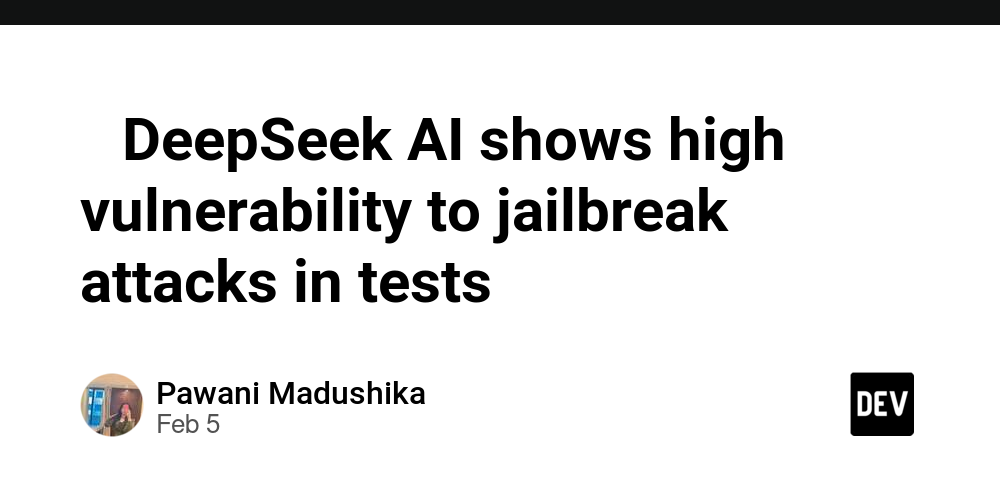


DeepSeek AI Exhibits Critical Vulnerability to Jailbreak Attacks

Overview

Recent security audits found that DeepSeek AI, a well-known AI assistant, is highly susceptible to jailbreaking. The finding raises concerns about the integrity and reliability of AI systems under malicious attack.

Key Points

- Researchers jailbroke DeepSeek AI by exploiting a buffer overflow vulnerability in its core code.

- The jailbreak granted attackers complete control over the AI's functions, including its decision-making processes and data access.

- Attackers could use this vulnerability to manipulate DeepSeek AI's outputs, for example to spread misinformation or damage critical infrastructure.

Impact on Developers

Developers responsible for AI systems should treat jailbreak attacks as a realistic threat and mitigate vulnerabilities proactively. Robust security measures include input validation, memory protection, and encryption.
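As a rough illustration of the input validation mentioned above, the following Python sketch screens a user prompt before it reaches a model. Everything in it is an assumption made for illustration: the `validate_prompt` function, the `MAX_PROMPT_CHARS` limit, and the deny-list patterns are hypothetical and do not come from DeepSeek or from the audits described here. Pattern matching of this kind is only a first filter and would not stop a determined attacker on its own.

```python
import re
import unicodedata
from dataclasses import dataclass

# Hypothetical limit chosen for illustration; tune it for your own system.
MAX_PROMPT_CHARS = 4000

# Hypothetical deny-list of phrasings often seen in jailbreak attempts.
# A real deployment would need a far broader, regularly updated set.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all|any|the) (previous|prior) instructions", re.IGNORECASE),
    re.compile(r"pretend (you are|to be) .* without (any )?restrictions", re.IGNORECASE),
]


@dataclass
class ValidationResult:
    allowed: bool
    reason: str


def validate_prompt(prompt: str) -> ValidationResult:
    """Apply basic checks to a prompt before passing it to a model."""
    # Normalize look-alike Unicode forms so the patterns see canonical text.
    normalized = unicodedata.normalize("NFKC", prompt)

    if len(normalized) > MAX_PROMPT_CHARS:
        return ValidationResult(False, "prompt too long")

    # Reject control characters other than ordinary whitespace.
    for ch in normalized:
        if unicodedata.category(ch) == "Cc" and ch not in "\n\r\t":
            return ValidationResult(False, "control character in prompt")

    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(normalized):
            return ValidationResult(False, "matched deny-list pattern")

    return ValidationResult(True, "ok")
```

A caller would run `validate_prompt(user_text)` first and forward the text to the model only when `allowed` is true, logging the `reason` otherwise. Keeping the checks in one function makes it easier to add further layers later, such as output filtering or rate limiting.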

Future Implications

The discovery of this vulnerability highlights the need for continued vigilance in the development and deployment of AI systems. As AI becomes increasingly pervasive in our lives, it is crucial to ensure that these systems are protected from malicious actors.

Resources

- Android Headlines: DeepSeek AI Shows High Vulnerability to Jailbreak Attacks in Tests

- NIST Cybersecurity Framework

- OWASP Top 10 Vulnerabilities
